Enterprise-Class Context Engineering: From Context Graphs to Production AI

- Arindam Banerji


Context Graphs are necessary. The Unified Context Layer (UCL) makes them work, with systems that consume, learn, and act. That's how agents become truly autonomous.

Technical Report — January 2026

The problem — and the solution — in one picture. A supplier delays, risk scores spike, and a deadline approaches. Without UCL: siloed data, 2-3 day disputes, ungoverned pipelines, no audit trail. With UCL: four sources fuse into one substrate, situation analysis scores and routes the signal, three copilots receive governed context packs, and the Evidence Ledger captures every decision — from signal detection at 08:15 to on-time delivery at Day+4.

Executive Summary

Enterprise AI is failing at scale. According to S&P Global Market Intelligence, 42% of companies abandoned most AI initiatives in 2025, up from 17% one year prior. A Fini Labs analysis of 50+ Fortune 2000 deployments reports that 87% of enterprise RAG implementations fail to meet ROI targets. Meanwhile, agents fragment context and write back to operational systems without contracts.

The failure point is not model capability. Foundation models have reached remarkable sophistication. The failure point is context—fragmented, ungoverned, and treated as a byproduct rather than engineered infrastructure.

Context Graphs have emerged as a compelling thesis—structured representations of enterprise knowledge enabling AI to reason, not just retrieve. But Context Graphs as a data structure are necessary, not sufficient. The hard problems remain unsolved:

Agent Decision-Making: Agents must analyze situations and decide—not follow scripts. This requires systems that CONSUME graphs: traverse, reason, evaluate options, determine responses. Without this, agents cannot be truly autonomous.

Agent Production-ization: Deployments must evolve at runtime. This requires systems that OPERATE on graphs: create, merge, alter, connect—accumulating intelligence with every execution. Without this, agents freeze after ship and decay in production.

Enterprise Integration: Enterprises have invested decades in ERP, EDW, process mining, ITSM. These contain the signals and institutional knowledge agents need. Any solution must integrate these investments, not ignore them.

Dumping metadata into Neo4j doesn't make agents work. You need consumption structures (systems that read), mutation operations (systems that write back), and governed activation (systems that act safely). That's what separates truly autonomous agents from scripted copilots.

This report presents the Unified Context Layer (UCL) as the leading architecture for enterprise-class context engineering—the architecture that operationalizes Context Graphs and enables truly autonomous agents operating within enterprise governance.

1. Context Engineering: The Formal Discipline

1.1 Definition and Scope

Context Engineering is the systematic design, optimization, and governance of all information provided to large language models at inference time. This definition emerges from a survey of over 1,400 research papers (arXiv 2507.13334), establishing Context Engineering as a formal discipline transcending simple prompt design.

The scope encompasses: system instructions and prompts, conversation history and memory, retrieved information, tool definitions and schemas, structured output constraints, and governance mechanisms.

The critical insight: LLMs function analogously to a new category of operating system. The model serves as processor; the context window functions as working memory. Context Engineering manages what occupies that window at each inference step.
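To make the working-memory analogy concrete, here is a minimal sketch that packs candidate context items into a fixed token budget by priority. The `ContextItem` type, the priority convention, and the whitespace-based token estimate are illustrative assumptions, not part of any framework discussed in this report.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    name: str       # hypothetical label, e.g. "system_instructions"
    text: str
    priority: int   # assumed convention: lower number = more important


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def pack_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Greedily admit items in priority order until the token budget is spent."""
    packed, used = [], 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            packed.append(item)
            used += cost
    return packed


items = [
    ContextItem("retrieved_docs", "word " * 50, priority=3),
    ContextItem("system_instructions", "word " * 10, priority=1),
    ContextItem("conversation_history", "word " * 30, priority=2),
]
window = pack_context(items, budget=45)
print([i.name for i in window])
```

With a 45-token budget, the instructions and history fit and the retrieved documents are left out. A production system would likely summarize or truncate rather than drop an item outright.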

[GRAPHIC 1: Context Engineering Taxonomy — Three-layer stack showing Context Management, Context Processing, Context Retrieval & Generation]

1.2 The Comprehension-Generation Asymmetry

A critical finding: models demonstrate remarkable proficiency in understanding complex contexts but struggle to generate equally sophisticated outputs. On the GAIA benchmark, human respondents achieve 92% success rates versus approximately 15% for GPT-4 equipped with plugins (arXiv 2311.12983). This is synthesis failure, not retrieval failure.

The implication: more context is not sufficient. The architecture of how context is structured, sequenced, and presented determines whether models can translate understanding into effective autonomous action.

2. Architectural Approaches in the Industry

Four architectural frameworks have emerged. Each addresses different aspects—and each has specific limitations that prevent truly autonomous agent operation.

2.1 Model Context Protocol (MCP): The Integration Standard

Anthropic's MCP addresses the N×M integration problem, establishing a universal protocol decoupling AI applications from data sources. The ecosystem includes 75+ production connectors, and the protocol was donated to the Linux Foundation's Agentic AI Foundation in December 2025.

Limitation: MCP solves connection, not governance. It enables integration but does not enforce semantics, situation analysis, or governed activation. Agents can connect to data but cannot reason autonomously over it.

2.2 Google ADK: Context as Compiled View

Google's Agent Development Kit frames context as a compiled view—Sources → Compiler Pipeline → Compiled View. It addresses three pressures: cost/latency spiraling, signal degradation, and reasoning drift.

[GRAPHIC 2: ADK Architecture — Sources → Compiler Pipeline → Compiled View]
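The Sources → Compiler Pipeline → Compiled View idea can be sketched as plain function composition. This is not ADK's API. The stage names (`drop_stale`, `dedupe`), the `fresh` field, and the record shape are hypothetical and exist only to illustrate the flow.

```python
from typing import Callable

Record = dict
Stage = Callable[[list[Record]], list[Record]]


def drop_stale(records):
    # Keep only records flagged as current (the "fresh" key is an assumed field).
    return [r for r in records if r.get("fresh", True)]


def dedupe(records):
    # Remove records with identical text, preserving first-seen order.
    seen, out = set(), []
    for r in records:
        if r["text"] not in seen:
            seen.add(r["text"])
            out.append(r)
    return out


def compile_view(sources: list[list[Record]], pipeline: list[Stage]) -> str:
    """Flatten all sources, run each pipeline stage in order, render one view."""
    records = [r for source in sources for r in source]
    for stage in pipeline:
        records = stage(records)
    return "\n".join(r["text"] for r in records)


history = [{"text": "User asked about PO 4711"}, {"text": "Old draft", "fresh": False}]
tools = [{"text": "User asked about PO 4711"}, {"text": "Tool: lookup_po(id)"}]
view = compile_view([history, tools], [drop_stale, dedupe])
print(view)
```

Each stage is a pure function over the record list, so stages can be reordered or tested in isolation. That is one plausible reading of "compilation discipline", not a claim about how ADK implements it.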

Limitation: ADK provides compilation discipline but does not address process-tech fusion, multi-consumption support, or governed activation. Agents receive better context but still follow predetermined paths.

2.3 Agentic Context Engineering (ACE): Self-Improving Playbooks

The ACE framework (arXiv 2510.04618) treats contexts as evolving playbooks—Generator → Reflector → Curator. It achieves +10.6% improvement on agent benchmarks and reduces adaptation latency by 82-91%.

[GRAPHIC 3: ACE Architecture — Generator → Reflector → Curator loop around Evolving Playbook]
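As a rough illustration of the Generator → Reflector → Curator loop, the sketch below replaces every model call with a deterministic stub. It is not the ACE paper's implementation. The function bodies, the lesson wording, and the success rule are assumptions chosen so the loop runs end to end.

```python
from typing import Optional


def generator(task: str, playbook: list) -> dict:
    # Stand-in for an LLM call: "succeeds" only if the playbook has a matching hint.
    succeeded = any(task in hint for hint in playbook)
    return {"task": task, "succeeded": succeeded}


def reflector(trajectory: dict) -> Optional[str]:
    # Turn a failed trajectory into a candidate lesson; successes yield nothing new.
    if trajectory["succeeded"]:
        return None
    return f"When handling {trajectory['task']}, check prior resolutions first"


def curator(playbook: list, lesson: Optional[str]) -> list:
    # Apply the lesson as an incremental addition, skipping duplicates.
    if lesson is None or lesson in playbook:
        return playbook
    return playbook + [lesson]


playbook = []
for task in ["invoice mismatch", "invoice mismatch", "late shipment"]:
    trajectory = generator(task, playbook)
    playbook = curator(playbook, reflector(trajectory))
print(len(playbook))
```

The second "invoice mismatch" task finds its hint and adds nothing, so the playbook ends with two entries. The curator only appends and never rewrites the playbook, which mirrors the idea of an evolving playbook in a very simplified form.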

Limitation: ACE enables self-improvement but requires an enterprise substrate to improve against. Without governed context from enterprise systems, what do playbooks learn from? Agents improve in isolation, disconnected from enterprise reality.

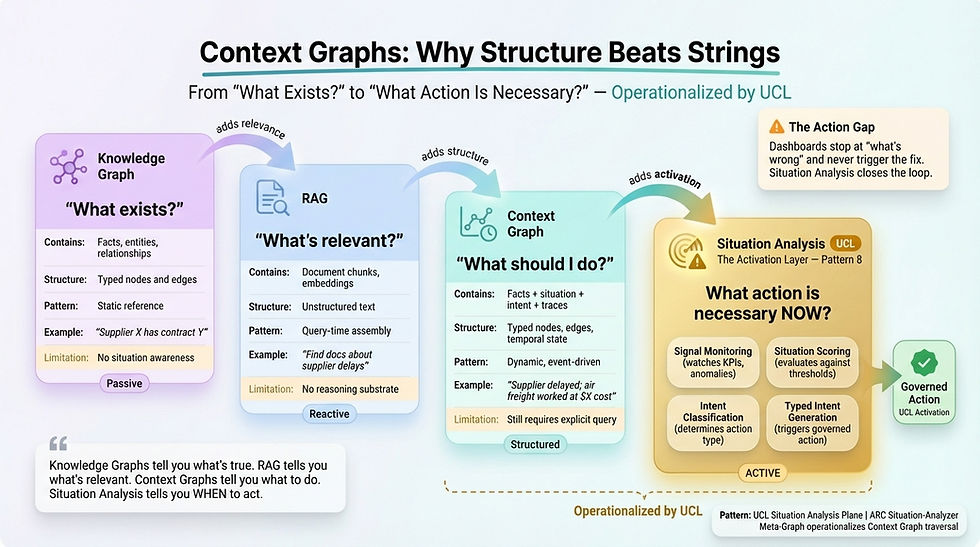

2.4 Context Graphs: Necessary But Not Sufficient

Foundation Capital's "Context Graphs" thesis identifies a trillion-dollar opportunity: graphs that capture not just state but decision traces—why decisions were made, what exceptions were approved, what precedents exist.

This is compelling—but Context Graphs as a data structure don't solve the hard problems:

The Agent Decision-Making Problem: Agents must analyze situations and decide—not follow scripts. Data in Neo4j doesn't do this. You need a Situation Analyzer—a system that consumes the graph and produces decisions. Without this, agents cannot handle novel situations; they escalate to humans. The human remains the bottleneck.

The Agent Production-ization Problem: Deployments must improve at runtime. A graph database stores data. You need runtime evolution systems that write back learnings—CREATE new patterns, MERGE entities, ALTER relationships, CONNECT decision traces. Without this, agents freeze after deployment and performance decays.

The Enterprise Integration Problem: Context engineering approaches that ignore existing IT infrastructure miss a fundamental reality. Enterprises have decades of investment in ERP, EDW, process mining, ITSM. These systems contain the signals and institutional knowledge agents need. Any architecture that doesn't integrate them fails to capture enterprise synergy.

[GRAPHIC 4: Context Graphs: Why Structure Beats Strings — From "What Exists?" to "What Action Is Necessary?" — Operationalized by UCL]

3. What Makes Context Engineering "Enterprise-Class"

Enterprise-class context engineering requires seven dimensions without which agents cannot operate reliably—or autonomously—in production.

[GRAPHIC 5: Enterprise-Class Context Engineering — The Seven Dimensions Required for Production AI Systems]

| Dimension | Requirement |
|---|---|
| Enterprise Signal Aggregation | Context from ERP, EDW, process mining, ITSM, CRM, not just documents |
| Process-Tech Fusion | Process intelligence joined with technology data under shared contracts |
| Multi-Consumption Support | One substrate for BI, ML, RAG, Agents, with no semantic forks |
| DataOps & Governance-as-Code | Contracts, fail-closed gates, auto-rollback |
| Meta-Graph for Reasoning | Context Graphs operationalized with consumption and mutation |
| Situation Analysis | Systems that consume graphs to analyze, decide, and enable autonomous action |
| Governed Activation | Pre-write validation, rollback, evidence capture |

Missing any dimension breaks the system—and prevents agents from achieving true autonomy.

4. UCL: The Leading Architecture for Enterprise-Class Context Engineering

The Unified Context Layer (UCL) delivers all seven dimensions, solves the hard problems that Context Graphs alone cannot, and enables truly autonomous agents operating within enterprise governance.

4.1 The Six Paradigm Shifts

Shift 1: Context becomes a governed product. Context Packs are versioned with evaluation gates (answerable@k ≥90%, cite@k ≥95%, faithfulness ≥95%).

Shift 2: Heterogeneous sources unify. Process intelligence, ERP, web signals converge through a common semantic layer.

Shift 3: Metadata becomes a reasoning substrate. The Meta-Graph is Context Graphs operationalized.

Shift 4: One substrate serves all consumption models. S1 (BI), S2 (ML), S3 (RAG), S4 (Agents), Activation.

Shift 5: Activation closes the governed loop. Pre-write validation, separation of duties, rollback, Evidence Ledger.

Shift 6: Situation analysis enables autonomous action. Agents reason over context, analyze situations, and decide—not follow scripts. This is what makes agents truly autonomous.
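The evaluation gates named in Shift 1 can be sketched as a fail-closed check. The thresholds come from the text above. The function name, the metrics dictionary shape, and the rule that a missing metric blocks promotion are assumptions made for illustration.

```python
# Thresholds taken from Shift 1; everything else in this sketch is assumed.
GATES = {"answerable@k": 0.90, "cite@k": 0.95, "faithfulness": 0.95}


def gate_context_pack(metrics: dict) -> tuple:
    """Return (promote, failures). A missing metric counts as a failure (fail-closed)."""
    failures = []
    for name, threshold in GATES.items():
        value = metrics.get(name)
        if value is None or value < threshold:
            failures.append(name)
    return (not failures, failures)


passing = gate_context_pack({"answerable@k": 0.93, "cite@k": 0.96, "faithfulness": 0.97})
blocked = gate_context_pack({"answerable@k": 0.93, "cite@k": 0.91})
print(passing)
print(blocked)
```

The second pack is blocked twice over: `cite@k` is below threshold and `faithfulness` was never measured. Treating an unmeasured metric as a failure is what makes the gate fail-closed rather than fail-open.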

4.2 The Eight Patterns

| Pattern | Function | Enterprise-Class Dimension |
|---|---|---|
| 1. Multi-Pattern Ingestion | Batch, streaming, CDC, process mining, web | Enterprise Signal Aggregation |
| 2. Connected DataOps | Contracts-as-code, fail-closed gates | DataOps & Governance |
| 3. StorageOps | Warehouses, lakehouses, vector stores | DataOps & Governance |
| 4. Semantic Layer | One KPI truth, anti-forking | Multi-Consumption Support |
| 5. Meta-Graph | LLM-traversable knowledge graph | Meta-Graph for Reasoning |
| 6. Process-Tech Fusion | Celonis/Signavio joined with ERP | Process-Tech Fusion |
| 7. Consumption Structures | S1, S2, S3, S4, Activation serve ports | Multi-Consumption Support |
| 8. Situation Analyzer | THE GATEWAY: situation scoring, governed action | Situation Analysis |

Together, these patterns create the governed substrate. But the substrate alone is not the end state. What makes UCL transformative is how this substrate feeds and enables a compounding architecture—and how that architecture enables truly autonomous agents.

[GRAPHIC 6: UCL Substrate Architecture: The Agentic Gateway — Situation Analysis Plane (Pattern 8) at top, Control Plane, Metadata Plane, Data/Serving Plane below]

4.3 The Compounding Architecture

[GRAPHIC 7: Why We Win: The Compounding Moat — UCL feeding structure, Agent Engineering feeding intelligence, both loops reading from and writing back to Accumulated Semantic Graphs]

Integrating Existing Enterprise Investments

UCL treats existing enterprise systems as first-class context sources. ERP provides transactional truth. Process mining provides operational intelligence. EDW provides historical patterns. ITSM provides system state. These investments don't get replaced—they get connected under shared contracts, enabling agents to reason across enterprise-wide context and delivering new ROI on existing infrastructure.

The Accumulated Semantic Graphs

At the center of the architecture: accumulated semantic graphs that receive inputs from two directions. UCL feeds structure—entity definitions, KPI contracts, process patterns, exception taxonomies. Runtime systems feed intelligence—execution traces, resolution patterns, outcome evaluations, learned behaviors. The graphs are not static data stores. They are living substrates that grow smarter with every workflow.

Two Compounding Loops

Pattern 8 (Situation Analyzer) is THE GATEWAY—but it operates within a dual-loop architecture:

Loop 1 — Smarter within each run: Runtime evolution optimizes deployments continuously. Routing rules, prompt modules, and tool constraints adjust based on outcomes. Learnings write back to the graphs: CREATE new patterns, MERGE duplicates, ALTER relationships.

Loop 2 — Smarter across runs: Situation analysis reasons over accumulated patterns to assess, classify, and decide. Decision traces write back for future learning. Each workflow enriches the substrate the next one inherits.

Both loops READ from and WRITE BACK to the accumulated semantic graphs. Context feeds both. Every workflow makes the next one smarter. This is what separates truly autonomous agents from scripted copilots—they learn, they adapt, they handle novel situations.
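The write-back operations named above (CREATE, MERGE, ALTER, CONNECT) can be illustrated with a small in-memory graph. This is a sketch only. The `SemanticGraph` class, its method signatures, and the merge rule (attributes on the kept node win) are assumptions, and a real deployment would sit on a graph database rather than Python dictionaries.

```python
class SemanticGraph:
    def __init__(self):
        self.nodes = {}   # node id -> attribute dict
        self.edges = {}   # (src, dst) -> relation label

    def create(self, node_id, **attrs):
        # CREATE: add a new node, e.g. a newly observed resolution pattern.
        self.nodes[node_id] = dict(attrs)

    def connect(self, src, dst, relation):
        # CONNECT: link two nodes, e.g. a decision trace to its pattern.
        self.edges[(src, dst)] = relation

    def alter(self, src, dst, relation):
        # ALTER: relabel an existing relationship; refuse to invent one.
        if (src, dst) not in self.edges:
            raise KeyError(f"no edge {src} -> {dst}")
        self.edges[(src, dst)] = relation

    def merge(self, keep, duplicate):
        # MERGE: fold a duplicate node into the kept one and rewire its edges.
        dup_attrs = self.nodes.pop(duplicate)
        self.nodes[keep] = {**dup_attrs, **self.nodes[keep]}
        rewired = {}
        for (src, dst), rel in self.edges.items():
            src = keep if src == duplicate else src
            dst = keep if dst == duplicate else dst
            if src != dst:  # drop self-loops created by the merge
                rewired[(src, dst)] = rel
        self.edges = rewired


g = SemanticGraph()
g.create("supplier:acme", name="ACME Corp")
g.create("supplier:acme-dup", name="Acme", region="EU")
g.create("pattern:price-variance")
g.connect("supplier:acme-dup", "pattern:price-variance", "exhibits")
g.merge("supplier:acme", "supplier:acme-dup")
g.alter("supplier:acme", "pattern:price-variance", "resolved")
print(g.nodes["supplier:acme"], g.edges)
```

After the merge, the kept supplier node carries the duplicate's `region` attribute and inherits its edge. The later `alter` call therefore succeeds against the surviving node.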

Governed Activation Closes the Loop

Reasoning without action is incomplete. UCL's governed activation ensures that autonomous decisions translate into safe, auditable, reversible action:

Pre-write validation confirms schema authority and idempotent keys

Separation of duties enforces approval workflows for sensitive writes

Rollback capability ensures any action can be reversed (target: ≤5 minutes)

Evidence Ledger captures the complete chain: signal → context → decision → action → outcome
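The four controls above can be sketched together in one small class. Everything here is hypothetical: the `GovernedWriter` name, the two-field schema, and the in-memory store and ledger stand in for whatever operational system and evidence store a real deployment would use. The ≤5 minute rollback target is not modeled.

```python
class GovernedWriter:
    REQUIRED_FIELDS = {"invoice_id", "status"}  # assumed schema for illustration

    def __init__(self):
        self.store = {}         # stand-in for the operational system
        self.seen_keys = set()  # idempotency keys already applied
        self.ledger = []        # append-only evidence records
        self.undo = []          # (record id, previous state) per applied write

    def write(self, key, record, requester, approver=None, sensitive=False):
        # Pre-write validation: schema first, then idempotency.
        if set(record) != self.REQUIRED_FIELDS:
            return self._log(key, "rejected: schema")
        if key in self.seen_keys:
            return self._log(key, "skipped: duplicate key")
        # Separation of duties: sensitive writes need a different approver.
        if sensitive and (approver is None or approver == requester):
            return self._log(key, "rejected: needs independent approver")
        rid = record["invoice_id"]
        self.undo.append((rid, self.store.get(rid)))  # remember prior state
        self.store[rid] = record
        self.seen_keys.add(key)
        return self._log(key, "applied")

    def rollback(self):
        # Restore the state that existed before the most recent applied write.
        rid, previous = self.undo.pop()
        if previous is None:
            del self.store[rid]
        else:
            self.store[rid] = previous
        return self._log(rid, "rolled back")

    def _log(self, ref, outcome):
        # Every attempt, including rejected ones, leaves an evidence record.
        self.ledger.append({"ref": ref, "outcome": outcome})
        return outcome


writer = GovernedWriter()
record = {"invoice_id": "INV-1", "status": "released"}
first = writer.write("key-1", record, requester="agent")
retry = writer.write("key-1", record, requester="agent")
blocked = writer.write("key-2", {"invoice_id": "INV-2", "status": "released"},
                       requester="agent", approver="agent", sensitive=True)
undone = writer.rollback()
print(first, retry, blocked, undone, sep=" | ")
```

Rejected and skipped attempts are logged alongside applied ones, so the ledger shows what the agent tried as well as what it changed.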

This is what enables enterprise outcomes—DPO ↓11 days, OTIF 87%→96%, MTTR in minutes not hours. Not insights that stop at dashboards—autonomous agents taking governed action with evidence.

One Governed Substrate, Many Autonomous Copilots

The power of enterprise-class context engineering becomes clear at scale. Build the substrate once—deploy many copilots against it. Each copilot inherits the full context infrastructure: enterprise signals fused, contracts enforced, situation analysis available, governed activation ready. No copilot starts from zero. Every copilot benefits from the intelligence accumulated by all the others.

[GRAPHIC 10: Context Engineering: One Governed Substrate, Many Autonomous Copilots - showing Context Engineering Substrate with Data Plane, Control Plane, Activation Plane feeding Major Incident Response, Service Desk Triage, Access Fulfillment, Financial Close, OTIF Rescue, Procurement Variance, and Net Effect copilots]

This is the "build once, deploy many" value proposition: Major Incident Response shares context infrastructure with Financial Close Reconciliation. OTIF Rescue inherits the same governed activation as Procurement Variance Guardian. The substrate compounds; each copilot accelerates the next.

5. Industry Use Cases: UCL vs. Alternative Approaches

Three scenarios demonstrate why enterprise-class context engineering requires UCL—and why alternatives cannot enable truly autonomous agents.

5.1 Invoice Exception Concierge (Source-to-Pay)

Scenario: Blocked invoice—3-way match failed. Need to: pull contract terms, identify root cause, decide resolution, execute, capture evidence.

| Approach | Where It Breaks |
|---|---|
| MCP | Connects to SAP, Oracle. No situation analysis to decide auto-approve vs escalate. No governed write-back. Agent escalates to human. |
| ADK | Compiles context. No governed activation to resolve in SAP. No evidence trail. Agent recommends; human acts. |
| ACE | Learns patterns. Against what? Without contract terms and supplier history, patterns don't ground. Agent learns in isolation. |
| Context Graphs | Stores data. What system decides? What writes back? What captures evidence? Data exists; agent can't use it autonomously. |
| UCL | Meta-Graph has contracts + tolerances + history. Situation Analyzer decides. Governed activation writes back. Evidence Ledger captures chain. Agent resolves autonomously. |

Result with UCL: DPO ↓11 days. $27M working capital freed. Autonomous resolution—exceptions escalate; everything else runs.
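To show what "decide auto-approve vs escalate" might look like in code, here is a minimal 3-way match sketch. The field names, the 2% price tolerance, and the decision labels are assumptions for illustration. In the architecture described here, tolerances would come from contract terms in the Meta-Graph rather than a default argument.

```python
def three_way_match(po, receipt, invoice, price_tolerance=0.02):
    """Compare purchase order, goods receipt, and invoice; return (decision, reasons)."""
    reasons = []
    if invoice["qty"] > receipt["qty"]:
        reasons.append("invoiced quantity exceeds received quantity")
    price_gap = abs(invoice["unit_price"] - po["unit_price"]) / po["unit_price"]
    if price_gap > price_tolerance:
        reasons.append(f"unit price off by {price_gap:.1%}")
    decision = "auto_approve" if not reasons else "escalate"
    return decision, reasons


po = {"qty": 10, "unit_price": 100.0}
receipt = {"qty": 10}
within = three_way_match(po, receipt, {"qty": 10, "unit_price": 101.0})
outside = three_way_match(po, receipt, {"qty": 12, "unit_price": 105.0})
print(within)
print(outside)
```

The first invoice is 1% over the PO price and is approved without human review. The second fails on both quantity and price, so it escalates with both reasons attached for the evidence trail.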

5.2 OTIF Recovery (Supply Chain)

Scenario: OTIF drops. Need to: fuse ERP with process mining, identify root cause, decide remediation, execute, prove it worked.

| Approach | Where It Breaks |
|---|---|
| MCP | Connects systems. Signals don't join: no semantic fusion. Agent sees fragments. |
| ADK | Compiles context. No process-tech fusion. Agent can't decide "expedite." |
| ACE | Learns patterns without connection to current carrier, inventory, weather. Learning is ungrounded. |
| Context Graphs | Stores routes, variants. What fuses? What decides? What executes? Agent can't act. |
| UCL | Process-Tech Fusion joins Celonis with ERP. Meta-Graph enables root cause traversal. Situation Analyzer decides. Governed activation executes. Agent remediates autonomously. |

Result with UCL: RCA same-day. OTIF 87%→96%. Autonomous remediation within policy guardrails.
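The fusion step in this scenario can be sketched as a join between ERP order outcomes and process-mining variants, followed by a count of which variants the failing orders followed. All data below is invented for illustration, and the field names and variant labels are hypothetical. OTIF is computed here as the share of orders delivered both on time and in full.

```python
from collections import Counter

erp_orders = [  # hypothetical ERP extract
    {"order": "A", "on_time": True, "in_full": True},
    {"order": "B", "on_time": False, "in_full": True},
    {"order": "C", "on_time": False, "in_full": True},
    {"order": "D", "on_time": True, "in_full": False},
]
process_variants = {  # hypothetical process-mining output: order -> observed variant
    "A": "standard", "B": "credit_hold", "C": "credit_hold", "D": "split_shipment",
}


def otif(orders):
    # Share of orders that were both on time and in full.
    hits = sum(1 for o in orders if o["on_time"] and o["in_full"])
    return hits / len(orders)


def failure_variants(orders, variants):
    # Join on order id, then count which variants the failing orders followed.
    failing = [o["order"] for o in orders if not (o["on_time"] and o["in_full"])]
    return Counter(variants[o] for o in failing)


rate = otif(erp_orders)
top_cause = failure_variants(erp_orders, process_variants).most_common(1)
print(rate, top_cause)
```

Neither source alone points to a cause. The ERP extract knows which orders failed, the process log knows which path each order took, and the join surfaces `credit_hold` as the dominant variant among failures.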

5.3 Major Incident Intercept (IT Ops)

Scenario: Servers spike latency at 2am. Need to: correlate with changes, score blast radius, decide remediation, execute, capture evidence.

| Approach | Where It Breaks |
|---|---|
| MCP | Connects monitoring, CMDB. No automatic correlation. Human detects. |
| ADK | Compiles for one analysis. No ongoing scoring. No governed execution. |
| ACE | Learns patterns without blast-radius scoring against current topology. |
| Context Graphs | Stores dependencies. What correlates? What scores? What executes? |
| UCL | Meta-Graph correlates change→incident. Situation Analyzer scores blast radius, decides rollback. Governed activation executes. Agent resolves before war room forms. |

Result with UCL: MTTR minutes, not hours. War room never forms. Autonomous incident resolution.
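Blast-radius scoring can be approximated as a breadth-first walk over a dependency graph, starting from the changed component. The topology, the `rollback_threshold` value, and the two decision labels below are assumptions for illustration. A real scorer would also weigh service criticality, traffic, and change history.

```python
from collections import deque

# Hypothetical CMDB extract: service -> services that depend on it.
DEPENDENTS = {
    "db-primary": ["orders-api", "billing-api"],
    "orders-api": ["web-frontend"],
    "billing-api": ["web-frontend"],
    "web-frontend": [],
}


def blast_radius(changed, dependents):
    """Breadth-first walk from the changed component to everything downstream."""
    impacted, queue = set(), deque([changed])
    while queue:
        node = queue.popleft()
        for downstream in dependents.get(node, []):
            if downstream not in impacted:
                impacted.add(downstream)
                queue.append(downstream)
    return impacted


def decide(changed, dependents, rollback_threshold=2):
    # Assumed policy: roll back when the change can reach enough services.
    radius = blast_radius(changed, dependents)
    action = "rollback" if len(radius) >= rollback_threshold else "monitor"
    return action, radius


db_decision = decide("db-primary", DEPENDENTS)
api_decision = decide("orders-api", DEPENDENTS)
print(db_decision)
print(api_decision)
```

A change to the primary database reaches three downstream services and triggers a rollback. A change to a single API reaches only the frontend and is left under observation.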

5.4 The Capability Gap

The use cases above reveal a consistent pattern: alternative approaches each deliver partial capabilities, but none delivers the complete system required for truly autonomous agents. The seven enterprise-class dimensions from Section 3 provide an objective framework for assessment.

[GRAPHIC 9: Enterprise Context Engineering Capability Matrix — Where architectural approaches stand on production-grade AI capabilities, showing MCP, ADK, ACE, Context Graphs, and UCL evaluated across all seven dimensions]

The matrix makes the gap visible:

MCP provides connectivity but nothing else—agents can reach data but cannot reason over it, decide, or act safely.

ADK adds compilation discipline and partial governance, but lacks process fusion, situation analysis, and governed activation—agents recommend but don't act.

ACE enables learning but without an enterprise substrate to learn against—agents improve in isolation, disconnected from enterprise reality.

Context Graphs provide the data structure but no consumption, mutation, or activation systems—data exists but agents can't use it.

UCL delivers all seven dimensions—the only architecture enabling agents that reason, decide, act, and learn.

The key insight: everyone has pieces. Only UCL has the connected system that compounds. This is not a matter of features to be added incrementally—the gap is architectural. Partial solutions cannot be patched into complete ones because they lack the foundational substrate.

| Approach | Provides | Missing | Agent Autonomy |
|---|---|---|---|
| MCP | Connection | Fusion, semantics, situation analysis, activation | None: escalates to human |
| ADK | Compilation | Process fusion, decisions, activation, evidence | Partial: recommends, doesn't act |
| ACE | Learning | Enterprise substrate to learn against | Isolated: learns but ungrounded |
| Context Graphs | Data structure | Consumption, mutation, activation | None: data exists, can't be used |
| UCL | All | None | Full: reasons, decides, acts, learns |

These use cases and the capability matrix demonstrate the core thesis: enterprise-class context engineering isn't just about better context—it's about enabling agents that can truly operate autonomously within enterprise governance.

6. Why This Matters Now

The Stakes

The numbers are stark:

42% of companies abandoned most AI initiatives in 2025 (S&P Global Market Intelligence, March 2025)—up from 17% one year prior

87% of enterprise RAG deployments fail to meet ROI expectations (Fini Labs analysis of 50+ Fortune 2000 deployments, July 2025)

68% of deployed agents execute ≤10 steps before requiring human intervention (UC Berkeley, arXiv:2512.04123, December 2025)

63% of enterprises cite output inaccuracy as their top Gen-AI risk (McKinsey Global Survey, May 2024)

The Diagnosis

These are context failures, not model failures. Foundation models have reached remarkable capability—and have largely commoditized. The differentiation has shifted from model selection to context architecture.

The enterprises failing at AI aren't choosing the wrong models. They're treating context as an afterthought: fragmented across systems, ungoverned, assembled ad-hoc for each use case. Every new copilot starts from zero. Nothing compounds. Agents can't operate autonomously because they lack the substrate to reason over.

The Timing

The window for building context infrastructure is now:

Gartner projects 33% of enterprise software will include agentic AI by 2028, up from less than 1% in 2024

Gartner also warns that over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls

The compounding dynamic means early movers build a lead that late entrants cannot easily close

Accumulated semantic graphs create a compounding moat. Every workflow enriches the substrate. Every resolution pattern feeds future decisions. Every autonomous action adds intelligence. The enterprises that build this infrastructure now will have truly autonomous agents while competitors are still scripting copilots.

The Choice

Enterprises face a binary choice:

Treat context as afterthought — Fragmented signals, ad-hoc assembly, ungoverned activation. Agents that escalate everything to humans. Join the 42% abandoning AI initiatives.

Treat context as governed infrastructure — Enterprise signals unified, agents that reason and decide, activation that closes the loop with evidence. Truly autonomous agents operating within enterprise governance. Capture disproportionate value as context compounds.

The architecture exists. The question is who builds the substrate first.

[GRAPHIC 8: Context Failure Modes — Why 95% of Gen-AI Pilots Fail: Six failure types with mechanisms and UCL prevention strategies]

Conclusion

Context Engineering has emerged as the discipline separating successful enterprise AI from the 87% of enterprise RAG deployments that miss their ROI targets. As foundation models commoditize, the differentiator is no longer which model you choose. It is whether you have the context infrastructure to make any model effective and to enable truly autonomous agents.

Context Graphs represent a crucial insight: AI needs structured representations of enterprise knowledge to reason, not just retrieve. But a data structure alone doesn't solve the hard problems:

Agent decision-making requires consumption structures that traverse graphs to analyze and decide

Agent production-ization requires mutation operations that write back learnings to compound intelligence

Enterprise synergy requires integration with existing ERP, EDW, process mining, ITSM investments

True autonomy requires governed activation that lets agents act safely within policy guardrails

UCL is the leading architecture that operationalizes Context Graphs into enterprise-class context engineering. Through eight co-equal patterns—with Pattern 8 as THE GATEWAY—UCL provides:

Existing enterprise investments unified as governed context sources

Accumulated semantic graphs fed by structure AND intelligence

Two compounding loops: smarter within each run, smarter across runs

Governed activation that closes the loop with rollback and evidence

Truly autonomous agents that reason, decide, act, and learn

The outcomes are measurable: DPO ↓11 days, OTIF 87%→96%, MTTR in minutes. Not insights that stop at dashboards—not agents that escalate to humans—but autonomous agents taking governed action with evidence.

The stakes are existential. The timing is now. The architecture is ready.

Context Graphs are necessary. UCL makes them work. That's how agents become truly autonomous.

References

Academic Research

Mei, L. et al. (2025). "A Survey of Context Engineering for Large Language Models." arXiv:2507.13334.

Zhang, Q. et al. (2025). "Agentic Context Engineering: Evolving Contexts for Self-Improving Language Models." arXiv:2510.04618.

Pan, M.Z. et al. (2025). "Measuring Agents in Production." arXiv:2512.04123. UC Berkeley, December 2025.

Mialon, G. et al. (2023). "GAIA: A Benchmark for General AI Assistants." arXiv:2311.12983. Meta AI, Hugging Face, AutoGPT. November 2023; ICLR 2024.

Industry Architecture

Anthropic. "Model Context Protocol." November 2024; Linux Foundation Agentic AI Foundation, December 2025.

Google Developers Blog. "Agent Development Kit." December 2025.

Foundation Capital. "Context Graphs: AI's Trillion-Dollar Opportunity." December 2025.

Enterprise Analysis

S&P Global Market Intelligence. "AI Project Failure Rates Survey." Early 2025. Survey of 1,000+ respondents in North America and Europe. Via CIO Dive, "AI project failure rates are on the rise: report," March 14, 2025. https://www.ciodive.com/news/AI-project-fail-data-SPGlobal/742590/

McKinsey & Company. "The state of AI in early 2024: Gen AI adoption spikes and starts to generate value." McKinsey Global Survey, 1,363 participants, May 30, 2024. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-2024

Fini Labs. "Why Most RAG Applications Are Too Broad to Be Useful, And Why the Future Is RAGless." Analysis of 50+ Fortune 2000 RAG deployments, July 28, 2025. https://www.usefini.com/blog/why-most-rag-applications-are-too-broad-to-be-useful-and-why-the-future-is-ragless

Gartner, Inc. "Gartner Predicts Over 40% of Agentic AI Projects Will Be Canceled by End of 2027." Press Release, June 25, 2025. https://www.gartner.com/en/newsroom/press-releases/2025-06-25-gartner-predicts-over-40-percent-of-agentic-ai-projects-will-be-canceled-by-end-of-2027

Framework Documentation

Banerji, A. "Unified Context Layer (UCL): The Governed Context Substrate for Enterprise AI." dakshineshwari.net, December 2025.

Banerji, A. "AI Productization Report: Measuring Agents in Production." dakshineshwari.net, December 2025.

Banerji, A. "AI Productization: Scaling Agent Systems." dakshineshwari.net, December 2025.

Banerji, A. "Gen-AI ROI in a Box." dakshineshwari.net, January 2026.

Arindam Banerji, PhD
