Unified Context Layer (UCL): The Governed Context Substrate for Enterprise AI

- Dec 19, 2025

Executive Summary

Enterprise AI fails at the last mile.

Dashboards proliferate but nobody trusts the numbers. ML models degrade for weeks before anyone notices. RAG systems hallucinate and ship without evaluation. Agents fragment context and write back without contracts. Process intelligence lives in silos, disconnected from ERP facts and business semantics.

The common root cause: context is fragmented, ungoverned, and treated as a byproduct rather than a product.

UCL (Unified Context Layer) is the governed context substrate that addresses this. UCL treats context as a first-class product — versioned, evaluated, and promoted like code. It works with your existing stack (Snowflake, Databricks, Fabric, Power BI, dbt, AtScale) without rip-and-replace.

UCL Enables; Copilots Act

A critical distinction: UCL is a substrate, not an application. UCL provides governed context and activation infrastructure. Agentic copilots sit on top of UCL and implement reasoning, decisions, and action-ization. The copilot is the actor; UCL is the stage, the governed context, and the safety net.

Six Paradigm Shifts

1. Context becomes a governed product. Context Packs are versioned, evaluated (answerable@k, cite@k, faithfulness), and promoted through CI/CD gates. Bad context doesn't reach production.

2. Heterogeneous data sources unify. Process intelligence (Celonis, Signavio), ERP data (SAP), web-scraped data, EDW/database data, and feature stores are not separate beasts. They come together through a common semantic layer; their metadata comes together through the Meta-Graph.

3. Metadata becomes a reasoning substrate. The Meta-Graph is a Knowledge Graph containing entities, KPIs, contracts, lineage, and usage. LLMs can traverse, query, and reason over enterprise relationships — not just retrieve documents.

4. One substrate serves all consumption models. BI, ML, RAG, and Agent situational frames share the same contracts, semantics, and context. No semantic forks. Works with existing tools.

5. Activation closes the governed loop. Reverse-ETL is not a separate pipe; it is the completion of the substrate, with pre-write validation, separation of duties, rollback capability, and evidence capture built in.

6. The Situation Analyzer enables autonomous action. Shifts 1–5 build the substrate; Shift 6 activates it. Without UCL, agents follow hardcoded scripts in workflows. With UCL, situation analysis enables agents to understand context, decide what to do, and act through governed channels — transforming copilots from script-followers into situation-responders.

What UCL Delivers (Customer Value)

| Value | What It Means |
|---|---|
| One KPI truth | End disputes: same contracted definition across dashboards, ML, RAG, and agents |
| Grounded context | Block hallucinations: Context Packs evaluated and promoted like code |
| Autonomous copilots | Situation analysis enables agents to decide what to do, not follow scripts |
| Closed-loop activation | Insights and analysis flow back to enterprise systems: governed, reversible, audit-ready |
| Process-tech fusion | ERP + process signals joined in semantic layer: same-day RCA |
| Meta-Graph reasoning | LLMs traverse entities, KPIs, lineage: blast-radius scoring before deploy |

Eight Architectural Patterns

UCL implements eight co-equal patterns that together form the substrate:

Multi-Pattern Ingestion — Batch, streaming, CDC, event-driven; process mining connectors

Connected DataOps & Observability — Contracts-as-code, governance-as-code, fail-closed gates, auto-rollback

StorageOps Across Categories — Works with warehouses, lakehouses, vector stores, feature stores

Common Semantic Layer — One KPI truth; Dashboard Studio; hot-set cache; anti-forking

Meta-Graph — Knowledge Graph for enterprise metadata; LLM-traversable; blast-radius scoring (for LLM reasoning over metadata)

Process-Tech Fusion — Celonis/Signavio signals joined with ERP facts under shared contracts (for human analysis via semantic layer)

Multiple Consumption Structures — S1 (BI), S2 (ML), S3 (RAG), S4 (Agent Frames), Activation

Situation Analyzer — THE GATEWAY between agents and substrate; understands context, scores situations, enables autonomous action

The Problem: Last-Mile Failures Across the Stack

Every layer of the modern data and AI stack suffers from last-mile failures — problems that emerge not from lack of capability, but from lack of governed context.

[INFOGRAPHIC 1: Last-Mile Failures Across BI / ML / RAG / Agents — NEW]

Traditional BI Last-Mile Failures

Dashboard sprawl without governance. Organizations accumulate hundreds of dashboards with no provenance, no retirement policy, and no single source of truth. Users create new dashboards because they don't trust existing ones.

The "two revenues" problem. Finance reports one revenue number; Sales reports another. Quarter-end becomes 2-3 days of reconciliation using tribal knowledge. Board packs are delayed. Executives lose trust in all numbers.

Poor mean-time-between-failures and silent data errors. When SAP changes a schema, everything downstream breaks — often silently. Data engineering teams spend enormous effort managing runbooks and repairing pipelines. Errors propagate before anyone notices.

Dashboards don't trigger action. The dashboard shows the problem. Then what? Humans swivel-chair between systems. Days pass. The bleed continues.

Traditional ML Last-Mile Failures

The business-technical accuracy gap. Model accuracy metrics (AUC, F1) don't translate directly into business outcomes. Research suggests that technical ML metrics frequently diverge from actual business value: a model optimized for accuracy may still cause revenue leakage if its errors cluster in high-value segments.

Late drift detection. Distribution drift is caught at inference time — weeks after the problem started. By then, model degradation has already impacted customers and operations. Retraining takes days.

Training-serving skew. Features computed during training differ from features computed during serving due to different pipelines, timing, or data sources. Models perform well in development but fail in production.

Feature-KPI disconnect. Feature stores are built without lineage to business semantics. The "churn" feature in the ML pipeline may not match the "churn" KPI in the BI dashboard. S1 and S2 diverge.

Reverse-ETL chaos. Writing ML scores and predictions back to operational systems (CRM, ERP) causes schema drift, failed writes, and no rollback capability.

GenAI/RAG Last-Mile Failures

No evaluation gates. RAG pipelines ship to production without systematic evaluation. Hallucinated content reaches users. Legal and compliance exposure accumulates.

No KPI grounding. RAG answers contradict what dashboards show. The copilot says revenue is up; the dashboard says it's down. Users lose trust in both.

Industry evidence is stark. According to 2024–25 industry surveys, 30% of GenAI projects are abandoned due to quality issues, unclear value, and rising costs; 63% of enterprises cite output inaccuracy as their top risk; and 70% cite data integration as the primary barrier to scaling GenAI.

Agent Last-Mile Failures

Hardcoded action logic. Agents cannot decide what action to take. Action logic is embedded in workflows and orchestration code. Agents follow predetermined scripts rather than responding to situations. Without situation analysis, agents are not autonomous — they're just automated.

Context fragmentation. Each copilot builds its own context through separate RAG pipelines. The Buyer Copilot defines "supplier risk" differently than the Finance Copilot. Semantic fragmentation at scale.

No activation contracts. Agents write back to operational systems without schema validation, without idempotent keys, without separation of duties. Schema drift and failed writes accumulate.

No evidence trail. When something goes wrong, there's no audit chain connecting signal → context → decision → action → outcome. Compliance becomes impossible.

Industry evidence. Industry data suggests that most AI projects never reach production, and those that do are often significantly delayed. The failure is not one of model capability; it is one of context and governance.

Six Dimensions of Fragmented Context

The last-mile failures share a common root: fragmented, ungoverned context. This fragmentation manifests across six dimensions.

Dimension 1: Dashboard Entropy

The Problem. Dashboards accumulate without governance. Each team creates its own views because existing dashboards are untrusted or undiscoverable. No provenance tracks where metrics come from. No retirement policy removes stale dashboards.

The Cause. No common semantic layer enforces one truth. No lineage connects dashboards to source contracts. No usage tracking identifies candidates for retirement.

The Impact. Users spend time hunting for the "right" dashboard. Multiple dashboards show conflicting numbers. New dashboards are created to "fix" the old ones, accelerating entropy.

UCL's Solution. The common semantic layer ensures one KPI definition serves all dashboards. Dashboard Studio (natural language → KPI specification → governed visualization) generates visualizations from governed semantic specs. The Meta-Graph tracks lineage and usage, enabling provenance and retirement. Prompt-based analysis reduces the need for bespoke dashboards.

Dimension 2: Semantic Divergence ("Two Revenues")

The Problem. Finance defines "revenue" one way; Sales defines it another; Operations uses a third. When leadership asks for the revenue number, teams spend days reconciling before producing a defensible answer.

The Cause. No contracts-as-code enforce consistent KPI definitions. Each system implements its own logic. Joins, filters, and aggregations diverge across pipelines.

The Impact. Quarter-end chaos. Board packs delayed. Executive trust eroded. Tribal knowledge required to reconcile.

UCL's Solution. Contracts-as-code using ODCS (Open Data Contract Standard) YAML specifications define KPIs once. The semantic layer enforces one definition across BI, ML, RAG, and agents. The Meta-Graph tracks which assets use which contract. Divergence becomes detectable and preventable.
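The minimal sketch below illustrates the idea. It uses a simplified in-memory contract, not actual ODCS YAML syntax. Each KPI is defined once, and every consumer registers the definition it actually uses. Comparing fingerprints then makes divergence detectable. The names (`KpiContract`, `fingerprint`, `find_divergent`), the revenue expression, and the filters are all hypothetical.

```python
from dataclasses import dataclass
import hashlib
import json


@dataclass(frozen=True)
class KpiContract:
    """Hypothetical, simplified KPI contract (not the ODCS schema)."""
    name: str
    version: str
    expression: str          # canonical calculation, e.g. SQL text
    filters: tuple = ()      # canonical row filters

    def fingerprint(self) -> str:
        # Stable hash of the semantic parts only; filter order does not matter.
        payload = json.dumps(
            {"expr": self.expression, "filters": sorted(self.filters)},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()[:12]


def find_divergent(contract: KpiContract, consumers: dict) -> list:
    """Return consumers whose registered definition differs from the contract."""
    expected = contract.fingerprint()
    return sorted(
        name for name, used in consumers.items() if used.fingerprint() != expected
    )


revenue = KpiContract(
    name="revenue",
    version="1.2.0",
    expression="SUM(net_amount)",
    filters=("status = 'booked'", "is_intercompany = false"),
)

consumers = {
    "finance_dashboard": revenue,
    "churn_model_feature": revenue,
    # The Sales copy dropped the intercompany filter: a "second revenue".
    "sales_copilot": KpiContract("revenue", "1.2.0", "SUM(net_amount)",
                                 ("status = 'booked'",)),
}

print(find_divergent(revenue, consumers))  # ['sales_copilot']
```

A real implementation would also record the fingerprint check against the Meta-Graph's "which asset uses which contract" edges. This sketch only shows the comparison itself.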

Dimension 3: Quarter-End Chaos

The Problem. Quarter-end close requires manual reconciliation across systems. Data engineering teams scramble to fix broken pipelines. Finance teams validate numbers using spreadsheets and tribal knowledge. The process takes days.

The Cause. No continuous validation catches issues before quarter-end. No fail-closed gates block bad data from propagating. No observability surfaces problems early.

The Impact. Delayed reporting. Audit risk. Executive distrust. Unsustainable workload on data teams.

UCL's Solution. Connected DataOps provides continuous validation — freshness, drift, and anomaly monitoring. Fail-closed gates block bad data at runtime. Auto-rollback restores last-known-good state. The system is always audit-ready, not just quarter-end ready.
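Fail-closed behavior can be sketched as a gate. The gate publishes a new batch only when every check passes; otherwise it keeps serving the last-known-good version. The check names, the thresholds, and the `publish_or_rollback` helper are hypothetical. The drift test is a simple relative mean-shift check, standing in for whatever drift detector an implementation actually uses.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean


def freshness_ok(loaded_at, max_age=timedelta(hours=2), now=None):
    """True if the batch was loaded within the allowed age (hypothetical SLA)."""
    now = now or datetime.now(timezone.utc)
    return now - loaded_at <= max_age


def drift_ok(baseline, current, max_rel_change=0.2):
    """Simple mean-shift check; a stand-in for a real drift detector."""
    base = mean(baseline)
    return abs(mean(current) - base) <= max_rel_change * abs(base)


def publish_or_rollback(candidate, last_known_good, checks):
    """Fail closed: any failing check means the candidate is not published."""
    failures = [name for name, passed in checks.items() if not passed]
    if failures:
        return last_known_good, failures
    return candidate, []


now = datetime(2025, 12, 19, 12, 0, tzinfo=timezone.utc)
checks = {
    "freshness": freshness_ok(now - timedelta(hours=5), now=now),  # stale load
    "drift": drift_ok([100, 102, 98], [101, 99, 103]),
}
served, failed = publish_or_rollback("orders_v42", "orders_v41", checks)
print(served, failed)  # orders_v41 ['freshness']
```

The key design choice is that the default outcome is "keep the previous version." A missing or failing check can delay new data, but it can never push unvalidated data downstream.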

Dimension 4: Prompt Inconsistency

The Problem. Different users asking similar questions get different answers. The analyst's natural language query returns one number; the executive's query returns another. The copilot contradicts the dashboard.

The Cause. No governed Prompt Hub ensures consistent query resolution. Each interface (Power BI Copilot, LakehouseIQ, custom RAG) resolves queries independently.

The Impact. Users lose trust in NL interfaces. Copilot adoption stalls. Shadow analytics persist.

UCL's Solution. The Prompt Hub and Prompt Catalog provide curated queries tied to the semantic layer. The same NL question resolves to the same governed KPI regardless of interface. Dashboard Studio and copilot experiences share the same semantic foundation.
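One way to picture the Prompt Catalog is as a simple normalize-then-lookup scheme. Interfaces do not resolve questions themselves. Instead, each passes the question to a shared resolver, which maps it to a governed KPI identifier. The catalog entries, synonyms, and stopwords below are hypothetical, and a production hub would likely use richer matching than exact keys.

```python
import re

# Hypothetical catalog: normalized question -> (governed KPI id, contract version).
PROMPT_CATALOG = {
    "revenue last quarter": ("kpi.revenue", "1.2.0"),
    "churn rate this month": ("kpi.churn_rate", "3.0.1"),
}

# Hypothetical synonyms mapped onto the catalog vocabulary.
SYNONYMS = {"sales": "revenue", "turnover": "revenue", "attrition": "churn"}
STOPWORDS = {"what", "was", "is", "our", "the", "show", "me", "please"}


def normalize(question: str) -> str:
    """Lowercase, drop stopwords, then map synonyms to catalog terms."""
    words = re.findall(r"[a-z]+", question.lower())
    words = [SYNONYMS.get(w, w) for w in words if w not in STOPWORDS]
    return " ".join(words)


def resolve(question: str):
    """Every interface calls this, so the same question hits the same KPI."""
    key = normalize(question)
    if key not in PROMPT_CATALOG:
        raise LookupError(f"no governed KPI for: {key!r}")
    return PROMPT_CATALOG[key]


print(resolve("What was our revenue last quarter?"))  # ('kpi.revenue', '1.2.0')
print(resolve("Show me sales last quarter, please"))  # ('kpi.revenue', '1.2.0')
```

Unresolvable questions raise an error rather than falling back to free-form generation. That choice mirrors the document's emphasis on governed answers over plausible ones.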

Dimension 5: Agent Context Fragmentation

The Problem. Agentic copilots build their own context. The Buyer Copilot retrieves supplier information through one RAG pipeline. The Finance Copilot retrieves the same information through another. Definitions diverge. Situational frames conflict. Action logic is hardcoded in workflows — agents follow scripts, not situations.

The Cause. No shared semantic layer for agent context. No governed Context Packs provide consistent situational frames. No situation analysis enables agents to understand context and decide what to do. Each copilot is a semantic island with predetermined action paths.

The Impact. Agents give conflicting recommendations. Cross-copilot workflows fail. Enterprise-wide agent orchestration is impossible. Agents cannot adapt to situations — they can only execute what was scripted.

UCL's Solution. Context Packs provide governed situational frames assembled from the semantic layer and Meta-Graph. Situation analysis enables agents to understand context and decide appropriate actions — not just execute hardcoded steps. All copilots consume the same governed context. S4 (Agent Frames) shares the same substrate as S1 (BI), S2 (ML), and S3 (RAG).

Dimension 6: The Signal-Action Disconnect

The Problem. Dashboards detect signals but don't trigger action. A churn risk appears on the dashboard. Then what? Humans interpret, decide, and act manually. The action isn't traced. The outcome isn't measured.

The Cause. No activation layer connects context to operational systems. No evidence ledger traces signal → context → decision → action → outcome.

The Impact. Dashboards become expensive entertainment. Signals are detected; problems continue. The ROI of analytics is questioned.

UCL's Solution. Governed activation (Reverse-ETL with contracts) provides the infrastructure for copilots to act on signals. Pre-write validation, separation of duties, and rollback capability ensure safe writes. The Evidence Ledger captures the complete chain. UCL provides the substrate; copilots implement the action-ization.

[INFOGRAPHIC 2: The Shift With UCL — Before/After — Slide 9]

UCL Substrate vs. Agentic Copilots

Understanding this distinction is fundamental to understanding UCL's role.

[INFOGRAPHIC 3: Agentic Systems Need UCL — One Substrate for Many Copilots — Slide 13]

The Layer Model

UCL operates as a substrate layer between data platforms and agentic applications:

Top Layer: Agentic Copilots — Implement reasoning, decisions, and action-ization

Middle Layer: UCL Substrate — Provides governed context and activation infrastructure

Bottom Layer: Data Platforms — Snowflake, Databricks, Fabric, SAP BTP

What UCL Provides

| Capability | UCL Delivers |
|---|---|
| Governed Context | Contracts-as-code, semantic layer, Context Packs |
| Situational Frames | S4 serve port with driver ranking and process signals |
| Intent Normalization | Typed-Intent Bus converts diverse agent intents into common schema |
| Situation Analysis | Control Tower and Situation Mesh understand context, score situations, and enable decision-making; not just scoring, but the foundation for agents to decide what to do |
| Reasoning Substrate | Meta-Graph for multi-hop traversal and blast-radius scoring |
| Activation Infrastructure | Pre-write validation, separation of duties, rollback, evidence capture |
| Evidence Trail | Evidence Ledger captures complete chain from signal to outcome |

What Agentic Copilots Provide (On Top of UCL)

| Capability | Copilot Delivers |
|---|---|
| Reasoning | Evaluate options, weigh trade-offs, plan actions using situational frames |
| Decision-Making | Choose what action to take based on governed context and situation analysis |
| Action-ization | Invoke UCL's activation layer to execute governed write-back |
| Orchestration | Route signals to specialist copilots (Buyer, Finance, Supply Chain) |

The Relationship

UCL enables. Copilots act.

Without UCL, copilots reason over ungoverned context, follow hardcoded scripts, and write through ungoverned pipes. With UCL, copilots consume governed situational frames, receive situation analysis that enables autonomous decision-making, and execute through governed activation.

The copilot is the actor. UCL is the stage, the governed context, and the safety net.

What Agentic Systems Cannot Do Alone

Without UCL's substrate, agentic systems face fundamental limitations:

Cannot maintain one KPI truth — each copilot invents its own joins and definitions

Cannot decide what action to take — action logic is hardcoded in workflows; agents follow scripts, not situations

Cannot align freshness, lineage, drift, and divergence across sources

Cannot share a semantic substrate across BI, RAG, agents, features, and reverse-ETL

Cannot provide governed activation paths for system writes, escalations, or policy-bound steps

Cannot guarantee safe writes — no reversible actions or contract-verified context before acting

The Shift With UCL

With UCL's substrate:

One governed substrate unifies retrieval, grounding, semantics, KPIs, lineage, and context

Situation analysis enables agents to understand context and decide what action to take — not just execute hardcoded steps

Typed intents become normalized, testable, explainable, and aligned to KPI and semantic contracts

Situations are evaluated through drift signals, semantic joins, evidence packs, and KPI deltas

Routing uses governed policies — SoD, idempotency, write-safety, and reversibility — not just model heuristics

Agent actions become predictable, auditable, and rollback-ready with a full evidence trail

Key UCL Components for Agentic Systems

Typed-Intent Bus. Normalizes intents across different agents into a common schema. When a Buyer Copilot and a Finance Copilot both need supplier information, the Typed-Intent Bus ensures they're speaking the same language.
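Here is a minimal sketch of intent normalization. It assumes each copilot emits its own free-form payload, and the bus maps that payload into one typed schema before anything downstream sees it. The `TypedIntent` fields, adapter functions, allowed actions, and payload shapes are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TypedIntent:
    """Hypothetical common schema shared by all copilots."""
    action: str        # e.g. "assess"
    entity_type: str   # e.g. "supplier"
    entity_id: str     # governed, resolved identifier
    kpi: str           # contracted KPI the intent is about
    source: str        # which copilot emitted it


ALLOWED_ACTIONS = {"assess", "escalate", "update"}


def from_buyer(payload: dict) -> TypedIntent:
    """Adapter for the (hypothetical) Buyer Copilot payload shape."""
    return TypedIntent("assess", "supplier", payload["vendor_no"].upper(),
                       "kpi.supplier_risk", "buyer_copilot")


def from_finance(payload: dict) -> TypedIntent:
    """Adapter for the (hypothetical) Finance Copilot payload shape."""
    cp = payload["counterparty"]
    return TypedIntent(payload["op"], cp["type"], cp["id"].upper(),
                       payload["metric"], "finance_copilot")


def validate(intent: TypedIntent) -> TypedIntent:
    """Schema-level checks every intent must pass before routing."""
    if intent.action not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action {intent.action!r}")
    if not intent.kpi.startswith("kpi."):
        raise ValueError("intent must reference a contracted KPI")
    return intent


a = validate(from_buyer({"vendor_no": "v-1042"}))
b = validate(from_finance({"op": "assess", "metric": "kpi.supplier_risk",
                           "counterparty": {"type": "supplier", "id": "V-1042"}}))
# Same entity and KPI, regardless of which copilot asked.
print((a.entity_id, a.kpi) == (b.entity_id, b.kpi))  # True
```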

Control Tower & Situation Mesh. The Control Tower orchestrates and routes; the Situation Mesh scores situations using KPIs, context, and drift signals. Together they provide situation analysis — the infrastructure that enables agents to understand what's happening and decide what to do.
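The division of labor can be sketched as a scoring function (the Situation Mesh) plus a routing table (the Control Tower). The score here is a hypothetical weighted sum of three inputs: a KPI delta, a drift signal, and a context gap. The weights, the threshold, and the copilot names are illustrative, not UCL's actual scoring model.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    domain: str              # e.g. "procurement", "finance"
    kpi_delta: float         # relative deviation from the contracted target
    drift: float             # 0..1 drift signal on the inputs
    context_complete: float  # 0..1 share of required context available


# Hypothetical weights and routes.
WEIGHTS = {"kpi_delta": 0.6, "drift": 0.3, "context_gap": 0.1}
ROUTES = {"procurement": "buyer_copilot", "finance": "finance_copilot"}


def score(s: Situation) -> float:
    """Situation Mesh: combine KPI, drift, and context signals into one score."""
    return (WEIGHTS["kpi_delta"] * min(abs(s.kpi_delta), 1.0)
            + WEIGHTS["drift"] * s.drift
            + WEIGHTS["context_gap"] * (1.0 - s.context_complete))


def route(s: Situation, threshold: float = 0.3) -> str:
    """Control Tower: ignore low scores, send the rest to a specialist copilot."""
    if score(s) < threshold:
        return "no_action"
    return ROUTES.get(s.domain, "human_review")


s1 = Situation("procurement", kpi_delta=-0.5, drift=0.2, context_complete=0.9)
s2 = Situation("logistics", kpi_delta=0.05, drift=0.0, context_complete=1.0)
s3 = Situation("logistics", kpi_delta=0.8, drift=0.5, context_complete=0.5)
for s in (s1, s2, s3):
    print(s.domain, route(s))
```

Domains with no registered copilot fall back to human review rather than being dropped. This keeps unrouted high-scoring situations visible.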

Evidence Ledger. Records every action for audit, compliance, and rollback. Captures who did what, when, why, with what context, and what happened as a result.
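The Ledger's contents (who, what, when, why, context, outcome) can be sketched as an append-only log. To make tampering detectable, this sketch hash-chains the entries. Hash-chaining is a common audit-log technique that the text above does not specify. The `EvidenceLedger` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class EvidenceLedger:
    """Hypothetical append-only, hash-chained evidence log."""

    def __init__(self):
        self.entries = []

    def record(self, who, what, why, context_pack, outcome=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else "genesis"
        body = {
            "who": who, "what": what, "why": why,
            "context_pack": context_pack, "outcome": outcome,
            "when": datetime.now(timezone.utc).isoformat(),
            "prev": prev_hash,  # links this entry to the previous one
        }
        body["hash"] = hashlib.sha256(
            json.dumps(body, sort_keys=True).encode()).hexdigest()
        self.entries.append(body)
        return body["hash"]

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = "genesis"
        for e in self.entries:
            unhashed = {k: v for k, v in e.items() if k != "hash"}
            digest = hashlib.sha256(
                json.dumps(unhashed, sort_keys=True).encode()).hexdigest()
            if e["prev"] != prev or e["hash"] != digest:
                return False
            prev = e["hash"]
        return True


ledger = EvidenceLedger()
ledger.record("finance_copilot", "hold_payment:INV-881", "supplier risk > 0.8",
              "supplier-risk-pack@2.3.0", outcome="held")
ledger.record("jdoe", "approve:INV-881", "SoD approval", "supplier-risk-pack@2.3.0")
print(ledger.verify())  # True
ledger.entries[0]["why"] = "edited later"
print(ledger.verify())  # False
```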

Without UCL, agents follow scripts. With UCL, situation analysis enables governed, autonomous action.

The Six Paradigm Shifts

UCL represents six fundamental shifts in how enterprises approach data, context, and AI. Shifts 1–5 build the governed substrate. Shift 6 activates it.

[INFOGRAPHIC 4: Six Paradigm Shifts — NEW]

Shift 1: Context Becomes a Governed Product

Traditional approach: Context is a byproduct. RAG pipelines retrieve whatever's available. Prompts are assembled ad-hoc. Quality is hoped for, not measured.

UCL approach: Context is a product. Context Packs are versioned, evaluated, and promoted like code.

What this means in practice:

Context Packs have versions, manifests, and release notes

Evaluation gates run in CI before promotion

Packs don't promote to production unless gates pass

Rollback to previous pack version is possible

Evidence captures which pack version served which request
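A CI promotion gate of this kind might look like the sketch below. The metric names (answerable@k, cite@k, faithfulness) come from the text. How each is computed here is an assumption, as are the thresholds and the `promote` helper:

- **answerable@k:** the share of eval questions whose top-k retrieved chunks include a gold chunk.
- **cite@k:** the share of answers that cite at least one chunk and only cite chunks from that top-k set.
- **faithfulness:** an average of per-answer scores from some external judge.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    retrieved: list      # chunk ids in rank order
    gold: set            # chunk ids that contain the answer
    cited: set           # chunk ids the generated answer cites
    faithfulness: float  # 0..1 score from an assumed external judge


# Hypothetical promotion thresholds.
THRESHOLDS = {"answerable@k": 0.90, "cite@k": 0.85, "faithfulness": 0.90}


def evaluate(cases, k=5):
    if not cases:
        raise ValueError("no eval cases: refusing to evaluate")  # fail closed
    n = len(cases)
    answerable = sum(bool(set(c.retrieved[:k]) & c.gold) for c in cases) / n
    cite = sum(bool(c.cited) and c.cited <= set(c.retrieved[:k]) for c in cases) / n
    faithful = sum(c.faithfulness for c in cases) / n
    return {"answerable@k": answerable, "cite@k": cite, "faithfulness": faithful}


def promote(pack_version, cases, k=5):
    """Promotion decision for one pack version; any missed gate blocks it."""
    metrics = evaluate(cases, k)
    failed = [m for m, t in THRESHOLDS.items() if metrics[m] < t]
    return {"pack": pack_version, "promoted": not failed,
            "metrics": metrics, "failed": failed}


cases = [
    EvalCase(["c1", "c2", "c3"], {"c2"}, {"c2"}, 0.95),
    EvalCase(["c4", "c5"], {"c5"}, {"c5"}, 0.92),
    EvalCase(["c6", "c7"], {"c9"}, {"c6"}, 0.60),  # retrieval missed the gold chunk
]
result = promote("supplier-risk-pack@2.4.0", cases)
print(result["promoted"], result["failed"])  # False ['answerable@k', 'faithfulness']
```

Storing the returned record alongside the pack version is one way to get the "which pack version served which request" evidence the list describes.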

Shift 2: Heterogeneous Data Sources Unify

Traditional approach: Process intelligence (Celonis, Signavio), ERP data (SAP), web-scraped data, EDW/database data, and feature stores are separate beasts. Each requires different integration patterns, different semantic models, different governance frameworks.

UCL approach: All data sources flow through the same substrate. They come together through the common semantic layer (for human analysis); their metadata comes together through the Meta-Graph (for LLM reasoning).

What this means in practice:

Celonis process variants join with SAP ERP facts under shared contracts

Web-scraped competitive data binds to the same entity definitions as internal data

Feature store features share lineage with BI KPIs

The Meta-Graph connects process signals, ERP tables, web sources, and ML features

Root cause analysis that previously required days of CSV joins happens same-day
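Here is a minimal sketch of such a join. It assumes process-mining variants and ERP facts have already been mapped to the same contracted key (here, a purchase order number). The query asks which suppliers' orders sit in each process variant and how much value is at stake. Field names, variant labels, and data are hypothetical. In practice the join would run in the warehouse or semantic layer, not in application Python.

```python
from collections import defaultdict

# Hypothetical process-mining output: one variant per purchase order.
process_variants = [
    {"po": "4500001", "variant": "standard", "cycle_days": 6},
    {"po": "4500002", "variant": "rework_loop", "cycle_days": 19},
    {"po": "4500003", "variant": "rework_loop", "cycle_days": 23},
]

# Hypothetical ERP facts keyed by the same contracted PO number.
erp_facts = {
    "4500001": {"supplier": "V-1042", "net_value": 12_000.0},
    "4500002": {"supplier": "V-2210", "net_value": 48_000.0},
    "4500003": {"supplier": "V-2210", "net_value": 31_000.0},
}

value_by_variant = defaultdict(float)
for row in process_variants:
    fact = erp_facts.get(row["po"])
    if fact is None:  # key not covered by the shared contract
        continue
    value_by_variant[(row["variant"], fact["supplier"])] += fact["net_value"]

print(dict(value_by_variant))
# {('standard', 'V-1042'): 12000.0, ('rework_loop', 'V-2210'): 79000.0}
```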

Shift 3: Metadata Becomes a Reasoning Substrate

Traditional approach: Metadata lives in catalogs. Lineage is a visualization. Catalogs answer "what exists?" but not "what happens if I change this?"

UCL approach: All metadata is pushed into a Knowledge Graph (Meta-Graph). LLMs can traverse, query, and reason over enterprise relationships — enabling reasoning about context, not just retrieval of documents.

What this means in practice:

"What KPIs are affected if this source schema changes?" — answered by graph traversal

"Show me all Context Packs using data from this contract" — queryable

Blast-radius scoring before change promotion

Consistency checks across the enterprise model

Context Packs assembled by querying the graph, not just keyword retrieval
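Blast-radius scoring can be sketched as a downstream traversal of the lineage edges in the Meta-Graph. This sketch runs a breadth-first search over an in-memory adjacency list. It scores impact as a weighted count of affected node types. The node names (including the SAP table used as a starting point), edge direction, and weights are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: upstream -> downstream.
EDGES = {
    "sap.VBAK": ["dbt.fct_orders"],
    "dbt.fct_orders": ["kpi.revenue", "feature.order_velocity"],
    "kpi.revenue": ["dash.exec_revenue", "pack.revenue_qa"],
    "feature.order_velocity": ["model.churn"],
}

# Hypothetical impact weight per node type (prefix before the dot).
WEIGHTS = {"kpi": 5, "model": 4, "pack": 3, "dash": 2, "feature": 2, "dbt": 1}


def downstream(node):
    """All nodes reachable from `node` via lineage edges (breadth-first)."""
    seen, queue = set(), deque([node])
    while queue:
        for child in EDGES.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def blast_radius(node):
    affected = downstream(node)
    score = sum(WEIGHTS.get(n.split(".")[0], 1) for n in affected)
    return score, sorted(affected)


score, affected = blast_radius("sap.VBAK")
print(score)                                           # 17
print([n for n in affected if n.startswith("kpi.")])  # ['kpi.revenue']
```

A change-promotion gate could compare this score against a threshold and require extra review above it. Any such threshold would be an implementation choice.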

The Meta-Graph is flexible — it can incorporate process signals, Context Pack metadata, agent action history, and any other metadata the implementation requires. What you put into it varies by maturity and deployment choices.

From Reasoning to Discovery: The Meta-Graph as Cross-Graph Search Substrate

The Meta-Graph’s power goes beyond answering questions about what exists. When multiple semantic domains are structured on the same governed layer, the Meta-Graph becomes the substrate for a fundamentally different capability: cross-graph discovery.

Consider a production deployment where UCL governs not just one context graph but six semantic domains:

| Domain | What It Captures | Example Entities |
|---|---|---|
| Security Context | Assets, users, alerts, attack patterns | (:User)-[:ASSIGNED_TO]->(:Asset) |
| Decision History | Decisions, reasoning, outcomes, confidence evolution | (:Decision)-[:TRIGGERED_EVOLUTION]->(:EvolutionEvent) |
| Organizational | Reporting lines, teams, access policies, role changes | (:User)-[:REPORTS_TO]->(:User) |
| Threat Intelligence | CVEs, IOCs, campaign TTPs, geo-risk scores | (:CVE)-[:EXPLOITS]->(:Service) |
| Behavioral Baseline | Normal patterns per user/asset/time | (:User)-[:NORMAL_BEHAVIOR]->(:TimeProfile) |
| Compliance & Policy | Regulatory requirements, retention rules, audit mandates | (:Policy)-[:REQUIRES]->(:Control) |

Each domain is valuable on its own. But the Meta-Graph connects them. And when you periodically run searches across domain boundaries — looking for relationships that no single graph contains — emergent discoveries appear.

Example: Threat-Contextualized Pattern Re-evaluation

The Decision History graph knows: “We’ve closed 127 Singapore logins as false positives (PAT-TRAVEL-001, confidence: 0.94).”

The Threat Intelligence graph knows: “Singapore IP range 103.15.x.x shows 340% increase in credential stuffing this month (source: CrowdStrike, confidence: HIGH).”

Neither graph alone raises a flag. But a cross-graph search discovers the intersection: our false positive calibration for Singapore may be dangerously miscalibrated given the current threat landscape. The system reduces PAT-TRAVEL-001 confidence from 0.94 to 0.79 and adds threat_intel_risk as a new scoring factor.
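The intersection search can be sketched as a join between the two domains on a shared key (here, a country code). The pattern records, the threat-feed shape, and the spike threshold (a 100% increase) are hypothetical. The sketch only flags candidates for re-evaluation. It does not reproduce the specific 0.94 to 0.79 adjustment, whose formula is not given here.

```python
# Hypothetical Decision History patterns (auto-close rules and their confidence).
patterns = [
    {"id": "PAT-TRAVEL-001", "geo": "SG", "confidence": 0.94, "closed_fp": 127},
    {"id": "PAT-TRAVEL-002", "geo": "DE", "confidence": 0.91, "closed_fp": 64},
]

# Hypothetical Threat Intelligence signals: month-over-month change by geography.
threat_intel = {
    "SG": {"credential_stuffing_change": 3.40},  # +340%
    "DE": {"credential_stuffing_change": 0.05},
}


def cross_graph_flags(patterns, threat_intel, spike=1.0, min_confidence=0.9):
    """Flag high-confidence auto-close patterns in geographies with a threat spike."""
    flags = []
    for p in patterns:
        signal = threat_intel.get(p["geo"])
        if (signal and signal["credential_stuffing_change"] >= spike
                and p["confidence"] >= min_confidence):
            flags.append((p["id"], p["geo"], signal["credential_stuffing_change"]))
    return flags


print(cross_graph_flags(patterns, threat_intel))  # [('PAT-TRAVEL-001', 'SG', 3.4)]
```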

Example: Role-Change Sensitivity Discovery

The Organizational graph knows: “jsmith was promoted to CFO three weeks ago — access to M&A data, board compensation, strategic plans.”

The Decision History graph knows: “jsmith’s login alerts have been routinely auto-closed for the past 6 months.”

Cross-graph discovery: a user whose alerts have been systematically under-scrutinized now has access to the firm’s most sensitive data. The system creates a new pattern (PAT-ROLE-CHANGE-SENSITIVITY-001) that flags role changes for users with established auto-close histories.
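This discovery works only if both graphs agree on who "jsmith" is. The minimal sketch below assumes a hypothetical entity-resolution map that links each domain's local identifier to one governed person ID. It applies an illustrative rule: flag anyone with a recent move into a sensitive role and a high historical auto-close rate. All IDs, dates, roles, and thresholds are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical entity resolution: (domain, local id) -> governed person id.
RESOLVE = {
    ("org", "E-0042"): "person:jsmith", ("sec", "jsmith@corp"): "person:jsmith",
    ("org", "E-0077"): "person:akim", ("sec", "akim@corp"): "person:akim",
}

org_role_changes = [  # Organizational graph
    {"emp": "E-0042", "new_role": "CFO", "changed": date(2025, 11, 28)},
    {"emp": "E-0077", "new_role": "Analyst", "changed": date(2025, 12, 1)},
]
alert_history = {  # Decision History graph: (auto-closed, total) login alerts
    "jsmith@corp": (58, 60),
    "akim@corp": (3, 40),
}
SENSITIVE_ROLES = {"CFO", "CEO", "General Counsel"}


def role_change_flags(today, window_days=30, min_auto_close=0.8):
    # Re-key Decision History by governed person id so the domains can join.
    rate_by_person = {RESOLVE[("sec", acct)]: closed / total
                      for acct, (closed, total) in alert_history.items()}
    flags = []
    for ch in org_role_changes:
        person = RESOLVE[("org", ch["emp"])]
        recent = today - ch["changed"] <= timedelta(days=window_days)
        rate = rate_by_person.get(person, 0.0)
        if recent and ch["new_role"] in SENSITIVE_ROLES and rate >= min_auto_close:
            flags.append((person, ch["new_role"], round(rate, 2)))
    return flags


print(role_change_flags(date(2025, 12, 19)))  # [('person:jsmith', 'CFO', 0.97)]
```

Without the `RESOLVE` step, "E-0042" and "jsmith@corp" would never match. That is the "semantic island" failure the following section describes.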

Why This Matters for UCL

These cross-graph discoveries are only possible because UCL provides the governed substrate that structures all six domains under shared contracts, consistent entity definitions, and traversable Meta-Graph relationships. Without UCL:

• Each domain is a semantic island with its own entity definitions

• Cross-domain joins require brittle manual pipelines

• Discoveries that depend on shared entity identity (the same “jsmith” across Security Context and Organizational) are impossible without governed entity resolution

The Meta-Graph isn’t just a catalog. It’s the substrate that makes institutional intelligence compound across domains.

The mathematics are super-linear. Two domains give you 1 cross-graph discovery surface. Four domains give you 6. Six domains give you 15. The formula is n(n-1)/2, the number of distinct domain pairs. Each new domain UCL governs doesn’t just add value; it opens a new discovery surface with every existing domain, so the nth domain adds n-1 new surfaces at once.
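The discovery-surface count is simply the number of unordered domain pairs, C(n, 2). A quick check of the figures quoted above (the domain labels are shorthand for the six domains in the earlier table):

```python
from itertools import combinations
from math import comb


def discovery_surfaces(n: int) -> int:
    """Number of distinct domain pairs: n(n-1)/2."""
    return n * (n - 1) // 2


domains = ["security", "decisions", "org", "threat_intel", "behavior", "compliance"]
for n in (2, 4, 6):
    pairs = list(combinations(domains[:n], 2))
    assert len(pairs) == discovery_surfaces(n) == comb(n, 2)
    print(n, discovery_surfaces(n))  # prints 2 1, then 4 6, then 6 15
```

Going from n to n+1 domains adds exactly n new pairs. This is why each added domain raises the count by more than the one before it.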

[GRAPHIC: GM-02 — Cross-Graph Connections: Combinatorial Growth (2→4→6 domains)]

Formally, this mechanism is structurally analogous to cross-attention in transformers (Vaswani et al., 2017) — each graph domain “attends to” every other domain, computing entity-to-entity relevance scores and transferring high-relevance information. The total discovery potential grows as O(n² × t^γ) where γ is between 1 and 2 — super-linear in both graph coverage and time in operation. (For the complete mathematical derivation, see Cross-Graph Attention: Mathematical Foundation.)
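To make the analogy concrete, here is scaled dot-product cross-attention, softmax(QK^T/√d)V, between entities of two domains, in plain Python. The entity embeddings are made-up 3-dimensional vectors. This only illustrates the attention mechanism the text compares to. It is not the derivation of O(n² × t^γ), nor UCL's actual discovery algorithm.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Each query entity (domain A) attends over key/value entities (domain B)."""
    d = len(keys[0])
    out, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Weighted mix of domain-B values: the "transferred" information.
        out.append([sum(wj * v[i] for wj, v in zip(w, values))
                    for i in range(len(values[0]))])
    return out, weights


# Hypothetical embeddings: a Decision History pattern attends to Threat Intel entities.
decision_patterns = {"PAT-TRAVEL-001": [1.0, 0.2, 0.0]}
threat_entities = {"SG-credential-stuffing": [0.9, 0.1, 0.0],
                   "CVE-in-unused-service": [0.0, 0.0, 1.0]}

_, w = cross_attention(list(decision_patterns.values()),
                       list(threat_entities.values()),
                       list(threat_entities.values()))
print(dict(zip(threat_entities, [round(x, 2) for x in w[0]])))
```

The travel pattern places more weight on the Singapore credential-stuffing entity than on the unrelated CVE. That is the "entity-to-entity relevance" the analogy refers to.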

Shift 4: One Substrate Serves All Consumption Models

Traditional approach: BI builds its semantic layer. ML builds its feature store. RAG builds its vector index. Agents build their own context. Each is a semantic island.

UCL approach: One substrate serves S1 (BI), S2 (ML Features), S3 (RAG/Context Packs), S4 (Agent Situational Frames), and Activation.

What this means in practice:

The "churn" KPI in the dashboard is the same "churn" in the ML feature is the same "churn" in the agent situational frame

Same contracts govern all consumption models

No semantic forks across consumption patterns

Works with existing tools: AtScale + Power BI Copilot, dbt + LakehouseIQ, Looker + custom copilots

Shift 5: Activation Closes the Governed Loop

Traditional approach: Reverse-ETL is a separate pipe. Data flows out to operational systems through tools disconnected from the context substrate. No contracts validate writes. No evidence traces outcomes.

UCL approach: Activation is the completion of the governed loop. The same substrate that provides context also governs the write-back. Insights and analysis flow back to the enterprise systems that run the business — governed, reversible, audit-ready.

What this means in practice:

Pre-write validation confirms schema authority and idempotent keys

Separation of duties applies approval workflows

Rollback capability ensures any action can be reversed

Evidence Ledger captures signal → context → (copilot decision) → action → outcome

UCL provides the activation infrastructure; copilots invoke it
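The four guarantees can be sketched together in one gateway:

1. A write is accepted only if it matches the target schema.
2. It must carry an idempotency key not seen before.
3. It must be approved by someone other than the requester.
4. The prior value is kept so the write can be rolled back, and each step is logged as evidence.

The `ActivationGateway` class, the CRM-like target, and the field names are hypothetical. The sketch shows the shape of the checks, not a production write path.

```python
class ActivationError(Exception):
    pass


class ActivationGateway:
    """Hypothetical governed write-back path to an operational system."""

    def __init__(self, target: dict, schema: dict):
        self.target = target  # stand-in for a CRM/ERP table: id -> record
        self.schema = schema  # field name -> expected Python type
        self.applied = {}     # idempotency key -> (record id, field, old value)
        self.evidence = []

    def write(self, key, record_id, field, value, requester, approver):
        if key in self.applied:  # idempotent: a replayed key is a no-op
            return "duplicate_ignored"
        if field not in self.schema or not isinstance(value, self.schema[field]):
            raise ActivationError(f"schema check failed for {field!r}")
        if approver == requester:  # separation of duties
            raise ActivationError("approver must differ from requester")
        old = self.target[record_id].get(field)
        self.target[record_id][field] = value
        self.applied[key] = (record_id, field, old)  # kept for rollback
        self.evidence.append(("write", key, requester, approver, old, value))
        return "applied"

    def rollback(self, key):
        record_id, field, old = self.applied.pop(key)
        self.target[record_id][field] = old
        self.evidence.append(("rollback", key, old))


crm = {"ACC-7": {"churn_score": 0.12}}
gw = ActivationGateway(crm, {"churn_score": float})
print(gw.write("sig-123", "ACC-7", "churn_score", 0.81, "churn_copilot", "jdoe"))  # applied
print(gw.write("sig-123", "ACC-7", "churn_score", 0.81, "churn_copilot", "jdoe"))  # duplicate_ignored
gw.rollback("sig-123")
print(crm["ACC-7"]["churn_score"])  # 0.12
```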

Shift 6: The Situation Analyzer Enables Autonomous Action

Traditional approach: Dashboards stop at "what's wrong." Humans interpret signals, swivel-chair between systems, and manually execute actions. Decision cycles take 40 hours. No systematic connection between insight and action. And when agents exist, their action logic is hardcoded in workflows — they follow predetermined scripts rather than responding to situations.

UCL approach: The Situation Analyzer — enabled by UCL's substrate — performs situation analysis: it understands context, scores situations, dispatches typed intents, and triggers governed action. Without UCL, agents follow hardcoded scripts in workflows. With UCL, situation analysis enables agents to understand what's happening and decide what to do — truly autonomous action grounded in governed context.

Why Shift 6 requires Shifts 1–5:

| Situation Analyzer Needs | UCL Provides (via Shifts 1–5) |
|---|---|
| One KPI truth for scoring | Contracted semantics (Shifts 1, 4) |
| Grounded context for reasoning | Evaluated Context Packs (Shift 1) |
| Fresh, consistent signals | Drift gates + unified sources (Shifts 2, 4) |
| Safe activation paths | Governed Reverse-ETL (Shift 5) |
| Audit trail for compliance | Evidence Ledger (Shift 5) |

What this means in practice:

Typed-Intent Bus normalizes signals into schema-validated intents (<150ms trigger SLA)

Control Tower routes to specialist copilots based on situation analysis

Pre-action checks validate policy, budget, and separation of duties before any write

Agents decide what action to take based on situation context — not hardcoded workflow logic

Decision loops compress from 40 hours to 90 seconds (~1,600× speed-up)

End-to-end: 2.1s P95 latency, 99.9% reliability, 7-year audit trail

The culmination: Without UCL (Shifts 1–5), the Situation Analyzer has no governed foundation — no consistent KPIs to score against, no evaluated context to reason with, no safe paths to act through. Without situation analysis, agents follow hardcoded scripts. With UCL, situation analysis enables governed, autonomous action. Dashboards transform from passive displays into autonomous decision systems. Copilots transform from script-followers into situation-responders.

Beyond the Six Shifts: UCL as the Foundation for Compounding Intelligence

Shifts 1 through 6 describe what UCL enables today: governed context, unified semantics, metadata reasoning, multi-consumer substrate, safe activation, and autonomous action. But there is a seventh shift that emerges from the first six — one that changes the competitive dynamics of enterprise AI.

Shift 7: The Substrate That Makes Intelligence Compound

Traditional AI deployments start smart and stay smart. They don’t get smarter. Every new model deployment starts from scratch. Every new copilot builds its own context. Intelligence doesn’t accumulate.

UCL changes this because it provides the governed substrate where accumulated intelligence persists and compounds. Specifically:

Decisions write back to the graph. When a copilot processes an alert and closes it as a false positive, that decision doesn’t just get logged — it creates a [:TRIGGERED_EVOLUTION] relationship in the context graph, depositing what the system learned. The next time a similar alert arrives, the system starts with that knowledge.

Patterns emerge from accumulated decisions. After 340+ decisions, the scoring weights have calibrated to reflect the firm’s actual risk profile. Week 1: 68% auto-close accuracy. Week 4: 89%. Same model. No retraining. The improvement comes entirely from accumulated context in the UCL-governed graph.

Cross-graph discoveries compound on top of decisions. The cross-graph search mechanism (described in the Shift 3 extension above) runs periodically, discovering relationships that no single domain contains. Each discovery feeds back into the graph, enriching future decisions. The Singapore threat recalibration doesn’t just fix one pattern — it creates a new scoring factor that improves every future decision involving geo-risk.
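The decision write-back loop can be sketched with a per-pattern Beta-Bernoulli estimate. Each confirmed outcome of an auto-close decision updates the pattern's confidence, with no model retraining. This Bayesian update is a swapped-in illustration. It is not the scoring method behind the 68% to 89% figures above. The pattern ID, the uniform prior, and the outcomes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PatternState:
    """Hypothetical per-pattern memory stored alongside the context graph."""
    pattern_id: str
    alpha: float = 1.0  # prior pseudo-count of correct auto-closes
    beta: float = 1.0   # prior pseudo-count of incorrect auto-closes
    history: list = field(default_factory=list)  # evolution events

    @property
    def confidence(self) -> float:
        """Posterior mean probability that an auto-close is correct."""
        return self.alpha / (self.alpha + self.beta)

    def record_outcome(self, decision_id: str, was_correct: bool) -> None:
        # Analogous to writing a [:TRIGGERED_EVOLUTION] edge: the outcome is kept.
        before = self.confidence
        if was_correct:
            self.alpha += 1
        else:
            self.beta += 1
        self.history.append((decision_id, was_correct, before, self.confidence))


state = PatternState("PAT-TRAVEL-001")
for i in range(20):
    state.record_outcome(f"D-{i}", was_correct=(i % 10 != 0))  # 18 right, 2 wrong
print(round(state.confidence, 3), len(state.history))  # 0.864 20
```

The model that consumes `confidence` never changes. Only the accumulated state does, which is the point the paragraphs above make about improvement without retraining.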

The Four Clocks of Enterprise Intelligence

This compounding operates across four measurable dimensions — what we call the Four Clocks:

| Clock | Question It Answers | UCL’s Role | What It Enables |
|---|---|---|---|
| State Clock | What’s true now? | Shift 4: one substrate, consistent semantics | Lookup: every system has this |
| Event Clock | What happened? | Shift 5: activation evidence, decision traces | History: context graphs provide this |
| Decision Clock | How did reasoning evolve? | Shift 6: situation analysis traces, weight evolution | Compounding: this is where most implementations stop |
| Insight Clock | What has the system learned about this firm? | Shift 3 (extended): cross-graph discovery via Meta-Graph | Institutional intelligence: this is the frontier |

Most enterprise AI implementations run Clock 1. Sophisticated ones reach Clock 2. The implementations that will create permanent competitive advantages need all four — and UCL is the substrate that makes Clocks 3 and 4 possible.

[GRAPHIC: FC-01 — Four Clocks Progression Diagram]

The New Employee Analogy

The simplest way to explain this to a non-technical stakeholder:

When you hire a new analyst, you don’t expect maximal productivity on day one. You invest in their ramp. Month three, they know which alerts are noise. Month six, they’re fast — not because of new skills, but because they absorbed the firm’s context. Its traffic patterns. Its false positive signatures. Its organizational quirks. Things that live in the hallways, not the handbook.

A system built on UCL does the same thing. It learns the firm’s patterns, its exceptions, its organizational quirks. And unlike the employee, it retains everything it has accumulated, it never leaves, and every new instance starts with everything every previous instance learned.

The cross-domain intuition an analyst only develops in year two? That’s the Insight Clock: the cross-graph search engine discovering relationships across all six semantic domains, overnight and at scale. It is only possible because UCL provides the governed substrate where all six domains share entity definitions, consistent contracts, and a traversable Meta-Graph.

Why This Is UCL’s Deepest Value

UCL’s immediate value is governance: one KPI truth, grounded context, safe activation. That’s real and measurable.

But UCL’s strategic value is that it’s the only substrate architecture that makes intelligence compound across time, across decisions, and across domains. Without UCL:

• Decisions can’t write back to a governed graph (no compounding)

• Cross-graph search can’t run across semantically consistent domains (no discovery)

• New copilots can’t inherit accumulated intelligence (no day-one advantage)

With UCL, every decision makes the next decision smarter. Every month of operation widens the gap between this system and any competitor that would need to start from scratch.

The moat isn’t the model. The moat is the graph. And the graph is governed by UCL.

(For the full mathematical framework — formal equations, worked examples, and 36-month simulation validation — see Compounding Intelligence: How Enterprise AI Develops Self-Improving Judgment. For the formal derivation of O(n² × t^γ) super-linear growth, see Cross-Graph Attention: Mathematical Foundation.)

The Seven-Layer Architecture

UCL is implemented as a seven-layer stack that works with existing platforms. The architecture supports all eight patterns through a consistent layer model.

[INFOGRAPHIC 5: Seven-Layer Stack — Snowflake Instantiation — Slide 7]

[INFOGRAPHIC 6: Seven-Layer Stack — Databricks Instantiation — Slide 8]

The Layer Model

| Layer | Name | Function |
|---|---|---|
| 7 | Context & Consumption | Prompt Hub, Context Pack Compiler, Retrieval Orchestrator/KG, Evidence Ledger, Reverse-ETL Writer |
| 6 | Data Contracts & Quality Gates | ODCS YAML contracts, CI gates, prompt-template contracts, write contracts, SLA monitors |
| 5 | Governance, Observability & Meta-Graph | Policies, access history, resource monitors, Meta-Graph index, memory governance |
| 4 | Semantic Layer & Dashboard Studio | Metrics, materialized/dynamic views, KPI Library, Prompt Catalog, Dashboard Studio |
| 3 | Orchestration & Connected DataOps | Workflows, orchestrator bridges, contract-enforcement procedures, notifications |
| 2 | Transform & Model | dbt models, SQL, data quality tests (Great Expectations), curated schemas, feature pipelines |
| 1 | Ingestion & Storage-Ops | Streaming, batch, CDC (change data capture) connectors, external tables, storage optimization |

Three Component Types

Base — Works anywhere: dbt, Fivetran, Great Expectations, ODCS YAML

Native — Platform-specific: Snowpipe (Snowflake), Delta Live Tables (Databricks), Unity Catalog (Databricks), Streams & Tasks (Snowflake)

UCL Extension — The differentiators: Context Pack Compiler, Retrieval Orchestrator/KG, Evidence Ledger, Dashboard Studio, Meta-Graph index

No Rip-and-Replace

UCL works WITH existing platform investments:

Organizations using Databricks continue using Databricks; UCL adds contracts, context packs, and governed activation

Organizations using Power BI continue using Power BI; UCL adds governed semantics and evidence capture

Organizations using dbt continue using dbt; UCL adds observability, context engineering, and activation

UCL extensions add governance, context engineering, and activation capabilities without replacing the underlying stack.

The Four-Plane Architecture: Pattern 8 as the Agentic Gateway

The seven-layer stack can also be understood through a complementary lens: four functional planes that organize the eight patterns by their role in the agent-substrate relationship.

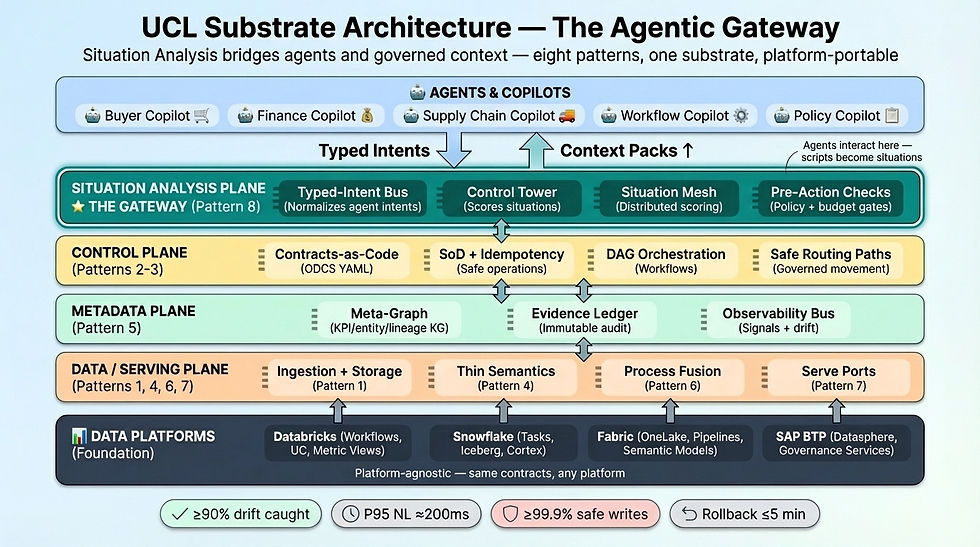

[INFOGRAPHIC 20: UCL Substrate Architecture — The Agentic Gateway — NEW]

Pattern 8 Is THE GATEWAY

Pattern 8 — the Situation Analyzer — is not just another pattern. It is THE GATEWAY between agentic copilots and the governed substrate. Every agent interaction flows through this plane:

Typed intents flow DOWN — Agents send normalized intents through the Situation Analysis Plane

Context Packs flow UP — Governed context returns through the same gateway

Without this gateway, agents interact directly with ungoverned data. With it, scripts become situations.

The Four Planes

| Plane | Patterns | Function | Key Components |
|---|---|---|---|
| Situation Analysis | Pattern 8 | THE GATEWAY: agent entry point for all substrate interactions | Typed-Intent Bus, Control Tower, Situation Mesh, Pre-Action Checks |
| Control | Patterns 2-3 | Governance enforcement and orchestration | Contracts-as-Code, SoD + Idempotency, DAG Orchestration, Safe Routing Paths |
| Metadata | Pattern 5 | Enterprise knowledge for reasoning and impact analysis | Meta-Graph (KPI/Entity/Lineage), Evidence Ledger, Observability Bus |
| Data / Serving | Patterns 1, 4, 6, 7 | Data operations, semantics, process fusion, and consumption | Ingestion, Thin Semantics + Context Packs, Process Fusion, Serve Ports (S1-S4) |

How the Planes Work Together

The Downward Flow (Typed Intents):

Agent sends intent → Situation Analysis Plane normalizes and scores

Control Tower routes → Control Plane validates contracts, SoD, policies

Query resolves → Metadata Plane provides entity/KPI context

Data assembles → Data/Serving Plane compiles Context Pack

The Upward Flow (Context Packs):

Context compiled → Data/Serving Plane assembles governed context

Grounding checked → Metadata Plane validates lineage and evidence

Policies applied → Control Plane enforces budgets and constraints

Pack delivered → Situation Analysis Plane returns to agent with situation scoring
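
The two flows can be traced in a toy handler. This sketch is an assumption-laden illustration, not UCL's gateway: `ALLOWED_ACTIONS`, `META_GRAPH_SOURCES`, the entity and source names, the `max_sources` budget, and the coverage-based `situation_score` are all hypothetical. It only shows the order in which the planes touch a request and that each downward step fails closed.

```python
from dataclasses import dataclass, field

# Hypothetical stand-ins for a Control Plane policy and for Meta-Graph lineage.
ALLOWED_ACTIONS = {"explain_kpi_drift", "recommend_carrier_priority"}
META_GRAPH_SOURCES = {"OTIF": ["erp.deliveries", "celonis.variants", "carrier.scorecard"]}


@dataclass
class TypedIntent:
    agent_id: str
    action: str
    entity: str


@dataclass
class ContextPack:
    entity: str
    sources: list
    trace: list = field(default_factory=list)
    situation_score: float = 0.0


def handle_intent(intent, max_sources=2):
    trace = []

    # Downward flow: typed intent.
    action = intent.action.strip().lower()            # Situation Analysis: normalize
    trace.append("situation:normalized")
    if action not in ALLOWED_ACTIONS:                 # Control: policy check, fail closed
        raise PermissionError(f"action {action!r} is not permitted")
    trace.append("control:validated")
    sources = META_GRAPH_SOURCES.get(intent.entity)  # Metadata: resolve entity context
    if not sources:
        raise LookupError(f"entity {intent.entity!r} is not in the Meta-Graph")
    trace.append("metadata:resolved")

    # Upward flow: Context Pack (pack.trace is the same list object as trace).
    pack = ContextPack(entity=intent.entity, sources=list(sources), trace=trace)
    trace.append("data:compiled")                     # Data/Serving: assemble context
    # Metadata: grounding check (trivially true here, since sources came from lineage).
    if not set(pack.sources) <= set(sources):
        raise ValueError("pack cites sources without lineage")
    trace.append("metadata:grounded")
    pack.sources = pack.sources[:max_sources]         # Control: enforce a context budget
    trace.append("control:budgeted")
    pack.situation_score = len(pack.sources) / len(sources)  # Situation Analysis: toy score
    trace.append("situation:scored")
    return pack


pack = handle_intent(TypedIntent("ops-copilot", " Recommend_Carrier_Priority ", "OTIF"))
print(pack.trace[-1], pack.sources)  # situation:scored ['erp.deliveries', 'celonis.variants']
```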

Why This View Matters

The four-plane architecture makes explicit what the seven-layer stack implies:

Pattern 8 is the choke point. Every agent interaction — whether reading context or writing to activation — passes through the Situation Analysis Plane. This is where scripts become situations.

Patterns group by function, not layer. Patterns 2-3 (DataOps, StorageOps) both serve governance even though they span multiple layers. Pattern 5 (Meta-Graph) serves reasoning even though it touches layers 5-7.

The gateway enables substitution. Different copilot implementations can sit above the gateway — they all interact through the same Situation Analysis Plane. The substrate doesn't care which copilot is calling; it ensures governed context regardless.

The Eight Architectural Patterns

The seven-layer architecture implements eight co-equal patterns that together form the governed context substrate.

[INFOGRAPHIC 7: Operational Core — Governed ContextOps Engine — Slide 22]

[INFOGRAPHIC 8: Pillars and Stages — How UCL Operates End-to-End — Slide 10]

Pattern 1: Multi-Pattern Ingestion

UCL supports diverse ingestion patterns, all flowing through the same contract framework:

Batch — Traditional ETL/ELT loads via Fivetran, Airbyte, or native connectors

Streaming — Real-time event processing via Kafka, Kinesis, or native streaming (Snowpipe Streaming, Databricks Auto Loader)

CDC — Change data capture from transactional systems

Process Mining — Celonis via Delta Sharing, Signavio via SAP BTP Event Mesh

Web/External — Scraped data, API feeds, partner data shares (e.g., Nasdaq via Delta Sharing)

Schema authority is established at ingestion. Freshness SLAs are set. Governance-as-code applies policies automatically.

Pattern 2: Connected DataOps & Observability

Contracts-as-Code. ODCS (Open Data Contract Standard) YAML specifications define KPIs, schemas, freshness requirements, and quality thresholds. Contracts are version-controlled in Git and enforced in CI and runtime.

Governance-as-Code. Policies for access control, PII masking, retention, and compliance are applied automatically. Policy changes propagate through the Meta-Graph.

Fail-Closed Gates. Runtime gates block data that violates contracts. Bad data doesn't propagate silently — the system fails closed rather than allowing contamination.

Auto-Rollback. When failures occur, the system restores last-known-good state automatically.
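
A fail-closed gate with last-known-good rollback fits in a few lines. The contract below is a hypothetical Python dict standing in for an ODCS YAML file; its keys (`required_columns`, `max_staleness`, `max_null_rate`) are illustrative and are not ODCS field names. `publish` keeps the current state whenever the candidate batch violates the contract, which is the behavior the two paragraphs above describe.

```python
from datetime import datetime, timedelta

# Hypothetical contract, loosely inspired by what an ODCS YAML file declares
# (schema, freshness, quality thresholds). These keys are NOT ODCS field names.
CONTRACT = {
    "required_columns": {"order_id": str, "gm_pct": float},
    "max_staleness": timedelta(hours=6),
    "max_null_rate": 0.01,
}


class ContractViolation(Exception):
    pass


def validate(rows, loaded_at, now, contract=CONTRACT):
    """Raise ContractViolation if the batch breaks freshness, type, or null-rate rules."""
    if now - loaded_at > contract["max_staleness"]:
        raise ContractViolation("freshness SLA breached")
    columns = contract["required_columns"]
    nulls = 0
    for row in rows:
        for column, expected_type in columns.items():
            value = row.get(column)
            if value is None:
                nulls += 1
            elif not isinstance(value, expected_type):
                raise ContractViolation(f"{column} has type {type(value).__name__}")
    cells = len(rows) * len(columns)
    if cells and nulls / cells > contract["max_null_rate"]:
        raise ContractViolation(f"null rate {nulls / cells:.1%} exceeds threshold")


def publish(current, candidate, loaded_at, now):
    """Fail closed: publish only a passing batch; otherwise keep last-known-good."""
    try:
        validate(candidate, loaded_at, now)
    except ContractViolation as err:
        return current, f"rolled back: {err}"
    return candidate, "published"


now = datetime(2025, 1, 6, 9, 0)
good = [{"order_id": "A1", "gm_pct": 0.42}]
state, status = publish([], good, loaded_at=now - timedelta(hours=1), now=now)
state, status = publish(state, [{"order_id": "A2", "gm_pct": None}], now - timedelta(hours=1), now)
print(status)  # rolled back: null rate 50.0% exceeds threshold
```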

End-to-End Observability. Freshness monitoring, drift detection using PSI (Population Stability Index) and KS (Kolmogorov-Smirnov) tests, anomaly detection, and volume tracking. Monitoring is stage-aware, keyed to the serve ports: S2 (ML features) monitors feature drift; S3 (RAG) monitors faithfulness; S4 (agents) monitors pre-action checks.
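
Both drift statistics named above have standard definitions, sketched here in plain Python. PSI compares binned proportions as the sum over bins of (a_i - e_i) * ln(a_i / e_i); the two-sample KS statistic is the largest vertical gap between the two empirical CDFs. The fixed bin edges and the `1e-6` floor for empty bins are assumptions of this sketch; production monitors often derive bin edges from the baseline's quantiles instead.

```python
import math
from bisect import bisect_right


def psi(expected, actual, bins):
    """Population Stability Index of `actual` against `expected` over fixed bin edges."""
    def proportions(values):
        counts = [0] * (len(bins) + 1)
        for v in values:
            counts[bisect_right(bins, v)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: max |F_a(x) - F_b(x)|."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    # Both ECDFs are step functions, so the supremum is attained at a data point.
    for x in a + b:
        d = max(d, abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b)))
    return d


baseline = [1, 2, 3, 4]
shifted = [5, 6, 7, 8]
print(ks_statistic(baseline, shifted))  # 1.0
```

A commonly cited rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift; the thresholds a contract actually enforces remain a design choice.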

Pattern 3: StorageOps Across Categories

UCL works across diverse storage categories without mandating specific technologies:

Warehouses — Snowflake, Redshift, BigQuery

Lakehouses — Databricks (Delta Lake), Fabric OneLake

Vector Stores — For embedding-based retrieval in S3/RAG

Feature Stores — For ML feature serving in S2

External Tables — Iceberg, Delta Lake open formats for interoperability

Governance-as-code ensures policy enforcement is automated across storage categories. Configuration is platform-specific, but contracts and governance are portable.

Pattern 4: Common Semantic Layer

One KPI Truth. KPI definitions (YAML specs) are defined once and consumed by all surfaces — BI dashboards, ML features, RAG context, agent frames. The "revenue" in Finance is the same "revenue" everywhere.

Dashboard Studio. Natural language → KPI specification → governed visualization. Users describe what they need; Dashboard Studio generates governed dashboards from the semantic layer. This reduces dashboard sprawl by enabling prompt-based analysis.

Hot-Set Cache. Prioritized KPIs are cached for fast response. Modelled performance target: P95 natural-language query latency of roughly 200 ms on hot sets.

Anti-Forking. Mechanisms prevent semantic divergence. Changes to KPI definitions propagate to all consumers. The Meta-Graph tracks which assets depend on which definitions, surfacing conflicts before they cause problems.
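
The "define once, consume everywhere" and anti-forking ideas can be sketched as a small registry. Everything here is hypothetical: `KpiRegistry`, `SemanticFork`, the consumer ids, and the use of a SHA-256 hash of canonical JSON as a definition fingerprint are illustrative choices, not UCL components. `define` returns the consumers to notify when a definition changes, and `check_local` rejects a surface-local definition that diverges from the governed one.

```python
import hashlib
import json


class SemanticFork(Exception):
    """Raised when a surface uses a KPI definition that diverges from the governed one."""


def fingerprint(spec):
    # Canonical JSON so that key order does not change the fingerprint.
    return hashlib.sha256(json.dumps(spec, sort_keys=True).encode()).hexdigest()


class KpiRegistry:
    """Hypothetical single home for KPI specs consumed by BI, ML, RAG, and agents."""

    def __init__(self):
        self._specs = {}      # name -> (version, spec)
        self._consumers = {}  # name -> set of consumer ids

    def define(self, name, spec):
        """Create or change the governed definition; return consumers to notify."""
        version = self._specs[name][0] + 1 if name in self._specs else 1
        self._specs[name] = (version, dict(spec))
        return sorted(self._consumers.get(name, set()))

    def resolve(self, name, consumer):
        """Every surface reads the same spec; the read is recorded for impact analysis."""
        self._consumers.setdefault(name, set()).add(consumer)
        return self._specs[name]

    def check_local(self, name, local_spec):
        """Reject a surface-local definition that differs from the governed one."""
        _, governed = self._specs[name]
        if fingerprint(local_spec) != fingerprint(governed):
            raise SemanticFork(f"local definition of {name!r} diverges from the contract")
```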

Pattern 5: The Meta-Graph

The Meta-Graph is a Knowledge Graph that makes enterprise metadata LLM-traversable — enabling reasoning about context, not just retrieval.

What the Meta-Graph Contains:

| Node Type | Examples |
|---|---|
| Entities | Customer, Product, Supplier, Order, Route |
| KPIs | Revenue, Churn Rate, OTIF, Cycle Time |
| Contracts | Schema specs, freshness SLAs, quality thresholds |
| Lineage | Source → Transform → KPI → Dashboard/Feature/Pack |
| Usage | Who consumed what, when, for what purpose |
| Process Signals | Celonis variants, drivers, bottlenecks |
| Context Packs | Pack manifests, evaluation results, versions |

What the Meta-Graph Enables:

Multi-hop reasoning: "What KPIs are affected if this source schema changes?" — answered by graph traversal

Blast-radius scoring: Before promoting a change, estimate downstream impact across dashboards, features, and Context Packs

Consistency checks: Detect when definitions diverge; surface conflicts before they cause problems

Graph-based context assembly: Context Packs assembled by traversing entity relationships, not just keyword retrieval

LLM-driven graph operations: a request such as "Add drift gates to all features downstream of this source" becomes executable

Cross-graph discovery: When multiple semantic domains are governed on the same Meta-Graph, periodic cross-domain searches discover relationships that no single graph contains — emergent, firm-specific insights like “our Singapore false-positive calibration is dangerously miscalibrated given current threat intelligence.” The discovery space grows as n(n-1)/2 — quadratic in the number of connected domains. This is the mechanism that transforms the Meta-Graph from a reasoning substrate into a compounding intelligence substrate. (See Shift 3 extension and Shift 7 for the full framework.)
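
Blast-radius scoring and the multi-hop question "What KPIs are affected if this source schema changes?" reduce to a downstream traversal of lineage edges. The sketch below uses a breadth-first search over a hypothetical lineage dict (node names are illustrative) and also encodes the n(n-1)/2 pair count quoted above; for six connected domains that is 15 domain pairs. A real Meta-Graph would sit in a graph store, but the traversal logic is the same.

```python
from collections import deque

# Hypothetical lineage edges: upstream node -> downstream nodes.
LINEAGE = {
    "src:sap.billing": ["model:fct_revenue"],
    "model:fct_revenue": ["kpi:revenue", "feature:customer_ltv"],
    "kpi:revenue": ["dashboard:cfo_weekly", "pack:finance_qa"],
    "feature:customer_ltv": ["pack:churn_frame"],
}


def blast_radius(graph, changed):
    """Breadth-first search: every asset downstream of `changed`, with its hop distance."""
    hops = {changed: 0}
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in hops:
                hops[child] = hops[node] + 1
                queue.append(child)
    del hops[changed]
    return hops


def domain_pairs(n):
    """Distinct domain pairs a cross-graph search can compare: n(n-1)/2."""
    return n * (n - 1) // 2


impact = blast_radius(LINEAGE, "src:sap.billing")
print([node for node in impact if node.startswith("kpi:")])  # ['kpi:revenue']
```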

[GRAPHIC: CGA-01 — Three Levels of Cross-Graph Attention]

Pattern 6: Process-Tech Fusion

Process intelligence, ERP data, and other heterogeneous sources come together through UCL — unified in the semantic layer for human analysis and decision-making.

[INFOGRAPHIC 9: Process-Tech Fusion — Celonis & Signavio Into UCL — Slide 12]

The Integration Model:

Celonis → UCL: Celonis EMS exports process graph KPIs (cycle time, rework, variants) via Delta Sharing. UCL ingests process tables, binds to entity contracts, registers joins in the Meta-Graph.

Signavio → UCL: SAP Signavio Process AI exports throughput and variant KPIs via BTP (Business Technology Platform) Event Mesh. Native ingestion with auto-typing. UCL binds to contracts and validates joins.
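
Binding process tables to entity contracts implies checking that the join actually holds before it is registered. The sketch below joins hypothetical process-mining rows to ERP facts on a contracted key and fails closed when join coverage drops below a threshold. The field names, the `order_id` key, and the 95% threshold are assumptions; a low match rate often points to a key-format mismatch (for example, leading zeros) rather than a genuine business gap.

```python
def fuse_process_with_erp(process_rows, erp_rows, key="order_id", min_match_rate=0.95):
    """Join process-mining rows to ERP facts on a contracted entity key.

    Raises ValueError when too few process rows find an ERP counterpart.
    On a column-name collision, the process-side value wins.
    """
    erp_by_key = {row[key]: row for row in erp_rows}
    fused = [{**erp_by_key[p[key]], **p} for p in process_rows if p[key] in erp_by_key]
    match_rate = len(fused) / len(process_rows) if process_rows else 1.0
    if match_rate < min_match_rate:
        raise ValueError(f"join coverage {match_rate:.1%} is below the contracted {min_match_rate:.0%}")
    return fused, match_rate


process = [
    {"order_id": "4500001", "variant": "rework", "cycle_days": 9.0},
    {"order_id": "4500002", "variant": "happy_path", "cycle_days": 3.0},
]
erp = [{"order_id": "4500001", "otif": False}, {"order_id": "4500002", "otif": True}]
fused, rate = fuse_process_with_erp(process, erp)
print(rate, fused[0]["variant"], fused[0]["otif"])  # 1.0 rework False
```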

What Fusion Enables:

Same-day RCA: Process variants and ERP facts are already joined under contracts. Root cause analysis happens same-day rather than requiring days of CSV exports and manual joins.

Governed Insight Cards: Process anomalies surface as Insight Cards, each with an owner, a scope, a verification step, and a rollback path.

Process-aware situational frames: Agent situational frames include process signals — which variants are active, which drivers are trending, what the process state is.

Pattern 7: Multiple Consumption Structures

UCL serves multiple consumption patterns through the Serve-Port model, working with existing tools.

[INFOGRAPHIC 10: Serve-Port Architecture — One Substrate, Four Consumption Models — Slide 6]

[INFOGRAPHIC 11: Consumption Models — One Substrate, Five Ways to Consume — Slide 11]

The Five Serve Ports:

| Port | Name | Function | Modelled Outcomes* |
|---|---|---|---|
| S1 | Cubes/Views (BI) | Metric Views, hot-set cache, prompt-driven dashboards, NL Q&A | 3-4× authoring throughput; bespoke dashboards ↓30-40% |
| S2 | Feature Tables (ML) | Delta/Dynamic tables, freshness windows, drift/skew monitors | Drift caught at ingestion; training/serving skew within caps |
| S3 | Gen-AI & RAG | Context Pack Compiler, KG hooks, evaluation harness | Faithfulness ≥95%; no un-evaluated packs in production |
| S4 | Planner Context (Agents) | Typed intents, situation analysis, Evidence Ledger | Safe, reversible actions; ≥99.9% compliant writes |
| Activation | Reverse ETL | Idempotent write-back, pre-write validators, rollback | Closed-loop verification; rollback ≤5 min |

*Modelled outcomes based on architecture design targets and industry benchmarks.

Works With Existing Tools:

The Serve-Port model integrates with existing tool investments:

AtScale + Power BI Copilot → S1 with governed semantics

dbt + LakehouseIQ → S1 with prompt-native BI

Looker + custom copilots → S1/S3 with shared semantic foundation

MLflow + Databricks Feature Store → S2 with contract-governed features

UCL doesn't mandate specific tools. It provides the substrate that makes any combination governed.

Pattern 8: The Situation Analyzer — The Agentic Gateway

The Situation Analyzer is the capstone pattern — it transforms served context into governed, autonomous action. More than that: Pattern 8 is THE GATEWAY between agentic copilots and the governed substrate.

The Gateway Role:

Every agent interaction flows through the Situation Analysis Plane. Typed intents descend to be scored and governed; Context Packs ascend to provide grounded, evaluated context. Without this gateway, agents interact directly with ungoverned data. With it, scripts become situations.

What the Situation Analyzer Does:

| Function | Description |
|---|---|
| Understands context | Consumes Context Packs, KPI signals, and process state |
| Scores situations | Evaluates against drift, freshness, and policy constraints via Situation Mesh |
| Enables decisions | Provides the foundation for copilots to decide what action to take |
| Triggers governed action | Dispatches typed intents through the Typed-Intent Bus to activation |

Why It's an Architectural Pattern:

Without the Situation Analyzer, UCL serves context but doesn't close the loop. Copilots receive Context Packs but still follow hardcoded scripts. With the Situation Analyzer:

Agents respond to situations, not scripts

Actions are grounded in scored, governed context

The complete chain from signal to governed action is enabled

Copilots transform from script-followers into situation-responders

Key Components:

| Component | Role |
|---|---|
| Typed-Intent Bus | Normalizes intents across agents into a common schema |
| Control Tower | Orchestrates and routes based on situation analysis |
| Situation Mesh | Scores situations using KPIs and drift signals |
| Pre-Action Checks | Validates policy, budget, SoD before any write |
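
Pre-Action Checks can be pictured as a function that collects every policy, budget, and separation-of-duties violation before a write is allowed. This is a minimal sketch under stated assumptions: the `POLICY` table, the action names, the limits, and the `WriteIntent` fields are hypothetical. Unknown actions fail closed, and a requester approving their own write is reported as an SoD violation.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy table; real rules would come from contracts in the Control Plane.
POLICY = {
    "adjust_invoice": {"targets": {"erp"}, "max_amount": 10_000.0, "needs_approval": True},
    "write_offer": {"targets": {"crm"}, "max_amount": 500.0, "needs_approval": False},
}


@dataclass(frozen=True)
class WriteIntent:
    action: str
    target_system: str
    amount: float
    requested_by: str
    approved_by: Optional[str] = None


def pre_action_checks(intent, remaining_budget):
    """Return every violation found; an empty list means the write may proceed."""
    rule = POLICY.get(intent.action)
    if rule is None:
        return [f"no policy for action {intent.action!r}"]  # unknown actions fail closed
    violations = []
    if intent.target_system not in rule["targets"]:
        violations.append("target system not allowed for this action")
    if intent.amount > rule["max_amount"]:
        violations.append("amount exceeds per-action limit")
    if intent.amount > remaining_budget:
        violations.append("amount exceeds remaining budget")
    if rule["needs_approval"]:
        if intent.approved_by is None:
            violations.append("approval required")
        elif intent.approved_by == intent.requested_by:
            violations.append("separation of duties: requester cannot approve")
    return violations
```

Returning the full list of violations, rather than stopping at the first, is a design choice that makes the rejection itself useful evidence for the ledger.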

The Architectural Sequence:

Patterns 1-7 build the governed substrate: data lands (Ingestion) → is governed (DataOps) → is stored (StorageOps) → gets meaning (Semantic Layer) → metadata enables reasoning (Meta-Graph) → process signals join (Process Fusion) → context is served (Consumption).

Pattern 8 completes the chain: context is analyzed → situations are scored → autonomous action happens through governed channels.

The Gateway Visualization:

The four-plane view (see "The Four-Plane Architecture" section) makes Pattern 8's gateway role explicit. The Situation Analysis Plane sits at the top — every agent interaction passes through it. Typed intents flow down; Context Packs flow up. This is where scripts become situations.

ContextOps: The Production Lifecycle

Context Packs move through a governed lifecycle — designed, compiled, evaluated, and served under explicit contracts and budgets.

[INFOGRAPHIC 12: Governed ContextOps — Design → Compile → Evaluate → Serve — Slide 15]

Design & Compile

Ordering & Compression. Normalize, rank, and compress retrieval inputs. Apply ordering functions (position → citation quality → correctness). Emit compact, ranked context bundles sized for downstream consumption.
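
One way to read the ordering chain is as a lexicographic sort key followed by budget-aware compression. The sketch below assumes exactly that: `tier` is a hypothetical coarse retrieval-position bucket, `citation_quality` and `correctness` are hypothetical scores in [0, 1], duplicates are dropped when they match after case and whitespace normalization, and token cost is approximated by a whitespace split rather than a real tokenizer. It is an interpretation, not the Context Pack Compiler's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str
    tier: int                # coarse retrieval position; lower is better (hypothetical)
    citation_quality: float  # 0..1 (hypothetical)
    correctness: float       # 0..1 (hypothetical)


def approx_tokens(text):
    # Crude whitespace proxy; a real compiler would use the target model's tokenizer.
    return len(text.split())


def compile_bundle(chunks, token_budget):
    """Rank by (tier, citation quality, correctness), drop duplicates, fit the budget."""
    ordered = sorted(chunks, key=lambda c: (c.tier, -c.citation_quality, -c.correctness))
    bundle, seen, used = [], set(), 0
    for chunk in ordered:
        normalized = " ".join(chunk.text.lower().split())
        if normalized in seen:
            continue  # same text up to case and whitespace
        cost = approx_tokens(chunk.text)
        if used + cost > token_budget:
            continue  # skip this chunk; a smaller, lower-ranked one may still fit
        bundle.append(chunk)
        seen.add(normalized)
        used += cost
    return bundle, used
```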

Drivers & KPI Binding. Bind drivers and variants to governed KPI Views via Meta-Graph traversal. Ensure standard join paths are intact. Align context with S1 KPI contracts so RAG and agent context matches dashboard truth.

Grounding & Citations. Produce outputs with explicit citation maps and source traces. Validate joins, references, and attribution before evaluation. Write grounding metadata to Evidence Ledger.

Knowledge Graph Packs. Build KG-aware bundles with entities, relations, and consistency checks. Validate graph-level constraints. Detect divergence or missing edges. Apply compression tuned for graph traversal.

Evaluate & Serve

Evaluation Metrics:

| Metric | What It Measures |
|---|---|
| answerable@k | Does the context contain information needed to answer the query? |
| cite@k | Can claims be traced to source documents within the context? |
| faithfulness | Does generated content accurately reflect the source context? |
| miss@k | What relevant information is missing from the context? |
| latency budget | Does context assembly meet response time targets? |
| token budget | Does context fit within LLM context window constraints? |

Promotion Gates. Run evaluation harness in CI. Apply latency, cost, compression, and consistency budgets. Block promotion unless all contracts and budgets pass. Fail-closed by design.
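
A promotion gate over these metrics can be sketched as floors and ceilings that a candidate pack must clear, with any missing metric counted as a failure. The metric definitions here are hypothetical operationalizations: `answerable_at_k` is the share of evaluation cases whose required facts all appear in the top-k chunks, and `cite_at_k` is the share of top-k chunks that carry a resolvable source, a proxy for claim-level tracing. Apart from the ≥95% faithfulness floor and the 200 ms latency figure quoted elsewhere in this article, the thresholds are placeholders.

```python
def answerable_at_k(cases, k):
    """Share of cases whose required facts are all covered by the top-k chunks."""
    hits = 0
    for case in cases:
        covered = set().union(*(chunk["facts"] for chunk in case["retrieved"][:k]))
        hits += case["required"] <= covered
    return hits / len(cases)


def cite_at_k(cases, k):
    """Share of top-k chunks that carry a resolvable source (a proxy for citability)."""
    top = [chunk for case in cases for chunk in case["retrieved"][:k]]
    return sum(chunk["source"] is not None for chunk in top) / len(top) if top else 0.0


FLOORS = {"answerable@k": 0.90, "cite@k": 0.95, "faithfulness": 0.95}  # placeholders except faithfulness
CEILINGS = {"p95_latency_ms": 200.0, "context_tokens": 4000}            # placeholder budgets


def promotion_gate(metrics):
    """Fail closed: a missing metric counts as a failure, never as a pass."""
    failures = [m for m, floor in FLOORS.items() if metrics.get(m, float("-inf")) < floor]
    failures += [m for m, cap in CEILINGS.items() if metrics.get(m, float("inf")) > cap]
    return not failures, failures
```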

Driver Ranking & Routing. Rank drivers and variants; choose tools and sources based on freshness and authority. Apply governance chips (separation of duties, idempotency, write-safety). Serve context and actions with reversible paths and attached evidence.

Governed Activation & Evidence

Activation is not a separate pipe. It's the completion of the governed loop — the same substrate that provides context also governs the write-back. Insights and analysis flow back to the enterprise systems that run the business.

Pre-Write Validation

Schema authority confirmed against target system contracts

Idempotent keys validated to prevent duplicate writes

Target system health and rate limits verified

Budget constraints checked

Separation of Duties

Approval workflows for sensitive writes

Role-based access to activation capabilities

Policy checks enforced before execution

Rollback Capability

Any write can be reversed (modelled target: ≤5 minutes)

Rollback recipe logged with who/what/when/before/after

Last-known-good state restorable
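
Idempotent keys and a logged rollback recipe work together: a replayed write returns the original recipe instead of writing twice, and the recipe holds enough who/what/when/before/after detail to undo the change. The `GovernedWriter` class, the in-memory dict standing in for a CRM or ERP table, and the key format in the usage are hypothetical; a real writer would call the target system's API and persist recipes in the Evidence Ledger.

```python
from datetime import datetime, timezone


class GovernedWriter:
    """Hypothetical activation writer: idempotent keys plus a logged rollback recipe."""

    def __init__(self, target):
        self.target = target  # stand-in for a CRM/ERP table: record_id -> value
        self.applied = {}     # idempotency_key -> rollback recipe

    def write(self, idempotency_key, record_id, value, actor):
        if idempotency_key in self.applied:
            return self.applied[idempotency_key]  # replay: no second write
        recipe = {
            "who": actor,
            "what": record_id,
            "when": datetime.now(timezone.utc).isoformat(),
            "before": self.target.get(record_id),
            "after": value,
            "existed": record_id in self.target,
        }
        self.target[record_id] = value
        self.applied[idempotency_key] = recipe
        return recipe

    def rollback(self, idempotency_key):
        """Restore the pre-write state recorded in the recipe."""
        recipe = self.applied.pop(idempotency_key)
        if recipe["existed"]:
            self.target[recipe["what"]] = recipe["before"]
        else:
            self.target.pop(recipe["what"], None)
        return recipe


crm = {"cust-17": {"offer": None}}
writer = GovernedWriter(crm)
writer.write("churn-cust-17-v1", "cust-17", {"offer": "10%"}, actor="churn-copilot")
writer.rollback("churn-cust-17-v1")
print(crm)  # {'cust-17': {'offer': None}}
```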

Evidence Ledger

The Evidence Ledger captures the complete chain:

| Element | What's Captured |
|---|---|
| Signal | What triggered the action (KPI breach, drift detection, agent intent) |
| Context | Which Context Pack version, which sources, which contracts |
| Decision | Copilot reasoning trace (what options considered, why this action) |
| Action | What was written, to which system, when, by whom |
| Outcome | Success/failure, downstream effects, any rollback |

This supports SOX, GDPR, and industry-specific compliance requirements. Auditors can trace any action back to its triggering signal through governed context.
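
An Evidence Ledger entry can be sketched as one record holding the five elements, appended to a log that auditors can query by action. The hash chaining below (each entry stores the previous entry's SHA-256 digest) is an assumption added for tamper evidence and is not stated as part of UCL's design; the class name and field contents are likewise hypothetical.

```python
import hashlib
import json


def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class EvidenceLedger:
    """Hypothetical append-only ledger of signal -> context -> decision -> action -> outcome."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, signal, context, decision, action, outcome):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"signal": signal, "context": context, "decision": decision,
                "action": action, "outcome": outcome, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def trace(self, action_id):
        """Return the full chain recorded for an action, back to its triggering signal."""
        for entry in self.entries:
            if entry["action"].get("id") == action_id:
                return entry
        raise KeyError(action_id)
```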

A Day With and Without UCL

Abstract architecture becomes concrete through daily experience. These scenarios show how UCL transforms actual work.

[INFOGRAPHIC 13: Day in the Life (Finance) — With vs Without UCL — Slide 17]

Finance: Morning to Afternoon

Without UCL — Morning: GM% shows unexpected volatility. Joins are inconsistent, slowing the morning close. The team doesn't trust the numbers. KPI trust is low; the close is delayed.

With UCL — Morning: Contracted GM% specification with drift gates clarifies anomalies immediately. The KPI behavior is trusted because it's governed. The close stabilizes.

Without UCL — Noon: Conflicting drivers from different systems stall diagnosis. No grounding means no lineage for root cause analysis. Misattribution risk rises. Teams debate whose numbers are right.

With UCL — Noon: Context Pack provides grounded, cited explanations. Evaluation gates enforce faithfulness thresholds, so hallucination risk drops sharply. Grounded answers enable fast resolution.

Without UCL — Afternoon: Unverified adjustments create rework and audit exposure. No evidence trail. High audit risk accumulates.

With UCL — Afternoon: Reverse-ETL with separation of duties ensures secure, reversible updates. Full evidence trail. Audit-ready operations.

[INFOGRAPHIC 14: Day in the Life (Operations) — With vs Without UCL — Slide 18]

Operations: Incident to Resolution

Without UCL — Morning (Incident Onset): Alerts fire with no consistency checks. Drift isn't caught early; noisy signals escalate. Engineers triage blind without lineage. Drift and noise increase.

With UCL — Morning: Drift gates catch divergence pre-consumer. Telemetry fused with lineage provides context. Healthy signals reduce false positives. Stability increases.

Without UCL — Noon (Triage): Conflicting metrics stall diagnosis. No grounding; conflicting drivers mislead root cause analysis. Ticket ping-pong increases mean time to resolution.

With UCL — Noon: Context Pack provides grounded RCA evidence. Drivers ranked; anomalies tied to known contracts. Triage accelerates with reversible routing chips. RCA speed increases.

Without UCL — Afternoon (Resolution): Fixes applied without reversible paths. High rework exposure after wrong updates. Ops risk grows; ML pipeline inconsistencies surface.

With UCL — Afternoon: Idempotent updates ensure safe writes. Rollback-ready paths reduce operational risk. Fixes validate cleanly across ML and BI pipelines. Rework decreases; safety increases.

Platform Instantiation

UCL instantiates on major platforms through the seven-layer architecture, with platform-specific native components and portable UCL extensions.

Snowflake Instantiation

Native Components: Snowpipe/Snowpipe Streaming (ingestion), Dynamic Tables and Materialized Views (semantic layer), Object Tags and Row/Column Policies (governance), Streams & Tasks (orchestration), Snowpark Feature Pipelines (ML)

UCL Extensions: Context Pack Compiler, Retrieval Orchestrator/KG, Evidence Ledger, Dashboard Studio, Meta-Graph index

Consumption: Powers S1→S4 + Activation. Power BI Copilot runs on governed semantics.

Databricks Instantiation

Native Components: Auto Loader and Delta Live Tables (ingestion), Unity Catalog and Lakehouse Monitoring (governance), Metric Views and Photon cache (semantic layer), Databricks Workflows (orchestration), MLflow feature notebooks (ML)

UCL Extensions: Context Pack Compiler, Retrieval Orchestrator/KG, Evidence Ledger, Dashboard Studio, Meta-Graph index

Consumption: Emits situational-analysis frames for external copilots. LakehouseIQ integration for prompt-native BI.

Portability

The same contracts, semantics, and Context Packs work across platforms. Organizations can:

Run Databricks for ML workloads and Snowflake for BI — same contracts govern both

Migrate between platforms without redefining KPIs

Operate multi-cloud estates without semantic drift

Industry Evidence: Proven Patterns

Individual UCL components have been proven at scale by industry leaders. UCL's differentiated value is unifying these proven patterns under one governed substrate.

Semantic Layer at Scale

TELUS deployed AtScale on BigQuery as a vendor-agnostic semantic layer for radio-network analytics, enabling consistent metrics across diverse BI tools. Skyscanner replaced legacy SSAS with AtScale on Databricks, achieving consistent measures at lakehouse speed. Wayfair operates a single Looker semantic model powering near real-time reporting across the organization.

Pattern proven: Universal metric definitions consumed by multiple tools eliminate the "n-truths" problem.

Data Contracts & Observability

JetBlue deployed Monte Carlo observability and achieved measurable lift in their internal "data NPS" — a trust metric for data reliability. Roche combines Monte Carlo with data mesh architecture, implementing domain-level reliability gates and AI pipeline checks. BlaBlaCar prevents downstream breakage with contract-style rules that block bad data before propagation.

Pattern proven: Contracts plus observability create trust and reduce silent data errors.

Process-Tech Fusion

Johnson & Johnson deployed Celonis and surfaced inefficiencies, reducing touch-time and revealing price-change leakage that traditional reporting had missed. Healthcare and manufacturing organizations across the Celonis portfolio report KPI improvements and near real-time operational triggers.

Pattern proven: Process signals combined with ERP facts accelerate RCA and enable action.

Knowledge Graphs for Enterprise Reasoning

Samsung acquired Oxford Semantic Technologies (2024) for on-device graph reasoning. ServiceNow acquired data.world (2025) for graph-based data catalogs. Microsoft's GraphRAG framework made graph-based retrieval accessible to developers.

Pattern proven: Knowledge graphs enable multi-hop reasoning that vector search alone cannot provide.

Cross-Graph Attention Formalism

The cross-graph discovery mechanism described in Shift 3 and Shift 7 has been formalized using the same computational form as transformer attention (Vaswani et al., 2017). At three levels — single-query scoring (analogous to scaled dot-product attention), domain-pair discovery (analogous to cross-attention), and full cross-graph search (analogous to multi-head attention) — the mathematical structure shows that discovery potential grows super-linearly: O(n² × t^γ) where n is graph coverage and t is time in operation.
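
For reference, the first line below is the scaled dot-product attention of Vaswani et al. (2017) that the formalism is modeled on, with queries Q, keys K, values V, and key dimension d_k, next to the stated growth bound. The second line is simple arithmetic under the added assumption that discovery potential D (a label introduced here) scales exactly as n² t^γ at leading order: doubling coverage quadruples it, and doubling time in operation multiplies it by 2^γ, about 2.83 at the γ = 1.5 used in the simulation. The full derivation is in the companion article.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V,
\qquad
D(n, t) = O\!\left(n^{2}\, t^{\gamma}\right)

D(n, t) \propto n^{2} t^{\gamma}
\;\Rightarrow\;
\frac{D(2n, t)}{D(n, t)} = 4,
\qquad
\frac{D(n, 2t)}{D(n, t)} = 2^{\gamma} = 2^{1.5} \approx 2.83
```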

Pattern proven: The mathematical correspondence between cross-graph discovery and attention mechanisms provides a formal, shape-checked foundation for the compounding moat claims. Complete derivation: Cross-Graph Attention: Mathematical Foundation.

Compounding Intelligence Validation

A parametric 36-month simulation (6 domains, 50 alerts/day, γ=1.5, 12-month competitor delay) validates the super-linear compounding: accuracy reaches 88% by Month 6, cross-graph discoveries grow quadratically, competitive gap at Month 24 is 75 (nearly 2× the competitor’s total), total ROI = $6.06M over 36 months. The SOC Copilot demo demonstrates Dimensions 1 and 2 (multi-factor scoring + weight evolution from 68% to 89%) in a working system, with architecture designed for Dimension 3 (cross-graph search).

Pattern proven: Runtime weight evolution and cross-graph discovery — the two mechanisms that make UCL-governed graphs compound — are validated in both simulation and working demo. See: Compounding Intelligence: How Enterprise AI Develops Self-Improving Judgment, SOC Copilot Demo.

The UCL Differentiator

Each pattern addresses one capability. UCL unifies all under one governed substrate:

| Pattern | Proven By | UCL Adds |
|---|---|---|
| Semantic Layer | TELUS, Skyscanner, Wayfair | Same layer serves BI AND ML AND agents AND activation |
| Data Contracts | JetBlue, Roche, BlaBlaCar | Same contracts govern ingest AND transform AND serve AND write-back |
| Process Fusion | J&J, Celonis portfolio | Same substrate fuses process AND ERP AND web AND features |
| Knowledge Graph | Samsung, ServiceNow, Microsoft | Meta-Graph enables reasoning about context, not just retrieval |
| Closed-Loop Activation | (UCL unique) | Signal → context → copilot → governed action → evidence |

The compound effect of unification exceeds the sum of individual implementations.

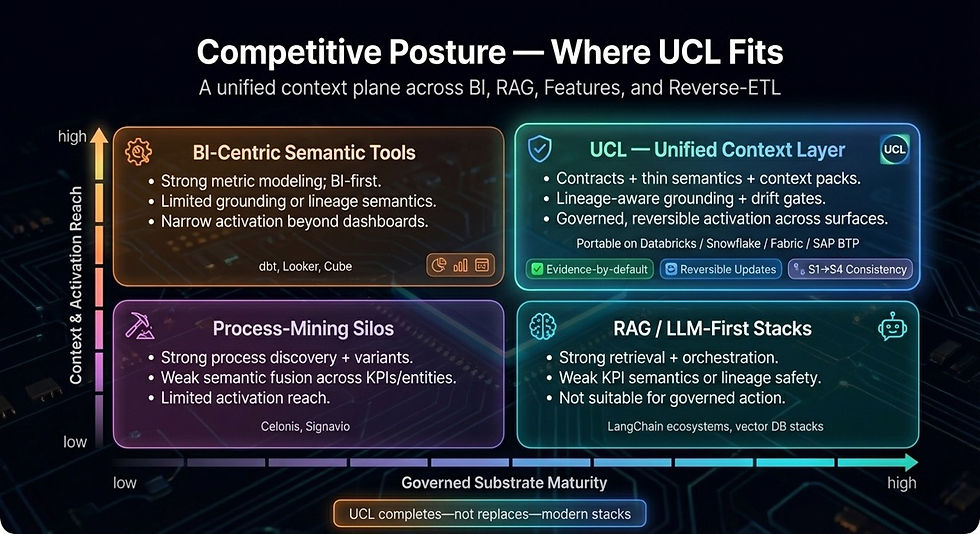

Competitive Position

[INFOGRAPHIC 16: Competitive Posture — Where UCL Fits — Slide 19]

The Landscape

BI-Centric Semantic Tools (dbt, Looker, Cube, AtScale)

Strong metric modeling within BI consumption

Limited context engineering for RAG/agents

No evaluated Context Packs

No governed activation layer

Process-Mining Platforms (Celonis, Signavio)

Deep process discovery, variant analysis, driver identification

Insights stop at reports and dashboards

No fusion with governed ERP semantics under shared contracts

No safe, reversible activation

RAG/LLM-First Stacks (LangChain, LlamaIndex, vector databases)

Strong retrieval orchestration and generation

Weak on enterprise contracts and KPI semantics

No lineage to business definitions

No governed write-back with evidence

UCL Position

High governed substrate maturity across all patterns

Full context and activation reach

Completes the stack rather than replacing it

UCL Differentiators

| Differentiator | What It Means |
|---|---|
| Source Unification | Process, ERP, web, EDW come together through semantic layer + Meta-Graph |
| Meta-Graph | Metadata as KG enables LLM reasoning about context itself |
| Evidence-by-Default | Every action traces to its triggering signal through governed context |
| Reversible Activation | Rollback capability; no ungoverned writes to operational systems |
| S1→S4 Consistency | Same substrate for BI, ML, RAG, and agents; no semantic forks |
| Situation Analysis | Enables agents to decide what to do rather than follow hardcoded scripts |
| The Gateway | Pattern 8 provides a single entry point for all agent-substrate interactions |
| Enables, Not Competes | Works with existing tools; extends rather than replaces |

[INFOGRAPHIC 15: Partner Ecosystem — We Complete Your Stack — Slide 21]

Scenario Summary: Signal to Evidence

[INFOGRAPHIC 17: Scenarios — Before vs With UCL — Slide 24]

| Scenario | Signal | Context (UCL) | Copilot Reasoning | Activation (UCL) | Evidence |
|---|---|---|---|---|---|
| End "Two Revenues" | KPI drift flagged | Contracted Metric View | — | — | Verified badge; disputes ↓* |
| Customer Churn Response | Churn score (S2) | Customer situational frame | Evaluate offers, decide | Write offer to CRM | Signal → decision → action traced |
| OTIF Recovery | OTIF drop + process variant | ERP + Celonis fusion | Identify root cause, recommend | Update carrier priority | Rollback ready; RCA same-day |
| Price Variance Defense | Celonis anomaly | Supplier/commodity context | Evaluate impact, decide action | Adjust invoice/escalate | Reversible; auditable |
| Grounded RAG | User query | KG-aware Context Pack | — | — | Faithfulness ≥95%*; citations |
| Safe Reverse-ETL | Scores ready | Schema authority validated | — | Write to ERP/CRM | ≥99.9% success*; rollback ready |
| Dashboard Sprawl Reduction | NL query | Prompt Hub + Semantic | — | — | Authoring throughput ↑; sprawl ↓ |
| Agentic Workflow | Typed intent | Situational frame + process | Reason, plan, decide | Governed write-back | Full chain traced |
| Cross-Platform Federation | Multi-platform signal | Same contracts across platforms | Platform-aware routing | Consistent activation | Portable; no semantic drift |

*Modelled outcomes based on architecture design targets.
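Several scenarios above end in "signal → decision → action traced" or "Full chain traced". The sketch below is one hypothetical way to represent such a trace in Python: stages must be recorded in order, gaps are easy to list, and a SHA-256 digest of the chain can be stored in an audit ledger. The `EvidenceTrace` class, the stage names, and the example values are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List

STAGES = ["signal", "context", "decision", "action", "evidence"]


@dataclass
class EvidenceTrace:
    trace_id: str
    steps: Dict[str, dict] = field(default_factory=dict)

    def record(self, stage: str, detail: dict) -> None:
        # Stages must arrive in order, each exactly once.
        position = len(self.steps)
        if position >= len(STAGES) or stage != STAGES[position]:
            raise ValueError(f"out-of-order or repeated stage: {stage!r}")
        self.steps[stage] = detail

    def missing_stages(self) -> List[str]:
        return [s for s in STAGES if s not in self.steps]

    def digest(self) -> str:
        # Deterministic SHA-256 fingerprint of the chain, suitable for an audit ledger.
        blob = json.dumps({"id": self.trace_id, "steps": self.steps}, sort_keys=True)
        return hashlib.sha256(blob.encode("utf-8")).hexdigest()


t = EvidenceTrace("otif-recovery-001")
t.record("signal", {"kpi": "OTIF", "variant": "manual_credit_block"})
t.record("context", {"context_pack": "otif-rca", "version": "1.3.0"})
t.record("decision", {"by": "copilot", "choice": "raise carrier priority"})
incomplete = t.missing_stages()   # ["action", "evidence"]: not yet audit-complete
```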

The Shift: Summary

[INFOGRAPHIC 18: Technical Closing — What Changes Once UCL Is Deployed — Slide 25]

Dimension | Before UCL | After UCL |

Context Status | Byproduct of pipelines | Governed product with versions and evaluation |

Data Sources | Process, ERP, web, EDW as separate systems | Unified through semantic layer + Meta-Graph |

Metadata | Passive catalog for humans | Knowledge Graph for LLM reasoning |

Consumption Models | Separate semantics per model | One substrate serves S1-S4 + Activation |

Agent Entry Point | Direct, ungoverned access | Gateway via Situation Analysis Plane |

Agent Context | Ad-hoc RAG per copilot | Governed situational frames via situation analysis |

Agent Action Logic | Hardcoded in workflows; agents follow scripts | Situation analysis enables autonomous decisions |

Activation | Separate Reverse-ETL pipe | Completion of governed loop with evidence |

KPI Definitions | n versions of the truth, one per system | One contract, many surfaces |

Dashboard Management | Sprawl accumulates without retirement | Prompt-based analysis; anti-sprawl controls |

Quarter-End | Manual reconciliation taking days | Continuous validation; audit-ready in hours |

Drift Detection | At inference, weeks late | At ingestion, immediate |

Process Signals | Siloed from ERP and finance | Fused under shared contracts in Meta-Graph |

Audit Trail | Broken or absent | Signal → context → action → evidence complete |

Tool Integration | Rip-and-replace required | Works with existing stack |

Quick Wins: 30-60 Day Value

[INFOGRAPHIC 19: Quick Wins — 30-60 Days — Slide 14]

Group 1: Governed KPI & BI (S1)

One-Source-of-Truth KPIs. Contract 8-12 core metrics in ODCS (Open Data Contract Standard) YAML. Deploy verified badges. Reduce KPI disputes. Increase executive trust.
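A verified badge only means something if every surface is checked against the one contracted definition. This Python sketch assumes a hypothetical `KpiContract` record standing in for an ODCS contract entry (its field names are not taken from the ODCS schema) and a relative tolerance of 0.1% chosen purely for illustration; the revenue figures are made up.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class KpiContract:
    """Illustrative stand-in for one contracted metric; field names are not ODCS's."""
    name: str
    version: str
    owner: str
    expression: str               # the single governed definition
    rel_tolerance: float = 0.001  # allowed relative deviation per surface


def verify_surfaces(contract: KpiContract, governed_value: float,
                    surface_values: Dict[str, float]) -> Dict[str, str]:
    """Badge each surface 'verified' if it matches the governed value, else 'disputed'."""
    denom = max(abs(governed_value), 1e-12)   # avoid division by zero
    return {
        surface: ("verified"
                  if abs(value - governed_value) / denom <= contract.rel_tolerance
                  else "disputed")
        for surface, value in surface_values.items()
    }


net_revenue = KpiContract("net_revenue", "2.1.0", "finance",
                          "SUM(invoice_amount) - SUM(credit_memo_amount)")
badges = verify_surfaces(net_revenue, 1_000_000.0,
                         {"power_bi": 1_000_000.0, "sales_api": 1_000_400.0,
                          "ops_spreadsheet": 1_030_000.0})
```

Here `sales_api` is 0.04% off and keeps its badge, while `ops_spreadsheet` is 3% off and is flagged as disputed.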

Prompt-Native BI Starter. NL → KPI spec → governed dashboards via Dashboard Studio. Target: 3-4× authoring throughput improvement. P95 NL response ≈ 200ms on hot sets.

Thin Semantic Layer. Single KPI YAML spec shared across BI, APIs, RAG, features. Zero conflicting KPI definitions. Warm NL response ≈ 200ms.
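One way to read "single KPI YAML spec shared across BI, APIs, RAG, features" is that every surface compiles its query from the same parsed spec instead of hand-writing SQL. The sketch below assumes a hypothetical spec layout (`table`, `numerator`, `denominator`, `dimensions`) held as a Python dict in place of YAML, and a generic SQL dialect.

```python
from typing import Dict, List

# Stand-in for the shared KPI YAML spec, already parsed (hypothetical fields).
KPI_SPEC: Dict[str, dict] = {
    "otif_rate": {
        "table": "fact_deliveries",
        "numerator": "SUM(CASE WHEN on_time AND in_full THEN 1 ELSE 0 END)",
        "denominator": "COUNT(*)",
        "dimensions": {"region", "carrier", "week"},
    }
}


def compile_metric_sql(metric: str, group_by: List[str]) -> str:
    """Compile one governed KPI definition into SQL for any consuming surface."""
    spec = KPI_SPEC[metric]
    unknown = set(group_by) - spec["dimensions"]
    if unknown:
        # Only dimensions declared in the spec are allowed.
        raise ValueError(f"dimensions not in spec: {sorted(unknown)}")
    dims = ", ".join(group_by)
    select = f"{dims}, " if dims else ""
    sql = (f"SELECT {select}{spec['numerator']} * 1.0 / NULLIF({spec['denominator']}, 0) "
           f"AS {metric} FROM {spec['table']}")
    return sql + (f" GROUP BY {dims}" if dims else "")


bi_sql = compile_metric_sql("otif_rate", ["region"])    # dashboard tile
api_sql = compile_metric_sql("otif_rate", ["region"])   # KPI API endpoint
```

Restricting `group_by` to declared dimensions serves two purposes: consumers cannot slice a KPI in ways the contract never defined, and caller-supplied strings never reach the SQL text unchecked.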

Group 2: RAG & Context Engineering (S3)

Context Packs for RAG. Retrieval schema + compression + policy + citations. Evaluation gates (answerable@k, cite@k). Target: sub-second grounded answers with faithfulness ≥95%.
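The source names answerable@k and cite@k but does not define them here, so the Python sketch below assumes common-sense definitions: answerable@k is the share of evaluation questions whose top-k retrieved chunks include at least one gold evidence chunk, and cite@k is the share whose generated answer cites a gold chunk that was also in the top k. Faithfulness usually needs an LLM or human judge and is left out. The `promotion_gate` thresholds are placeholders, not UCL defaults.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class EvalCase:
    retrieved: List[str]   # chunk ids in rank order
    gold: Set[str]         # chunk ids that actually support the answer
    cited: Set[str]        # chunk ids the generated answer cited


def answerable_at_k(cases: List[EvalCase], k: int) -> float:
    """Share of questions with at least one gold chunk in the top k (assumed definition)."""
    return sum(1 for c in cases if set(c.retrieved[:k]) & c.gold) / len(cases)


def cite_at_k(cases: List[EvalCase], k: int) -> float:
    """Share of answers citing a gold chunk that was retrieved in the top k (assumed definition)."""
    return sum(1 for c in cases if c.cited & c.gold & set(c.retrieved[:k])) / len(cases)


def promotion_gate(cases: List[EvalCase], k: int = 5,
                   min_answerable: float = 0.9, min_cite: float = 0.85) -> dict:
    a, c = answerable_at_k(cases, k), cite_at_k(cases, k)
    return {"answerable@k": a, "cite@k": c,
            "promote": a >= min_answerable and c >= min_cite}


cases = [
    EvalCase(["c1", "c2", "c3"], {"c2"}, {"c2"}),   # found and cited correctly
    EvalCase(["c4", "c5", "c6"], {"c5"}, {"c4"}),   # found, but cited the wrong chunk
    EvalCase(["c7", "c8", "c9"], {"c1"}, set()),    # evidence never retrieved
    EvalCase(["c1", "c3", "c2"], {"c3"}, {"c3"}),   # found and cited correctly
]
gate = promotion_gate(cases, k=2)   # answerable@k 0.75, cite@k 0.5 -> blocked
```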

Group 3: ML Features & MLOps (S2)

Feature Store Hot-Start. 5-8 production features with freshness monitoring and drift gates. Lineage connection to BI KPIs. Drift alerts before model degradation.
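A drift gate needs a concrete drift statistic. This Python sketch swaps in the Population Stability Index (PSI), a widely used choice, together with a simple freshness check. The bin edges, the 0.2 PSI limit (a common rule of thumb), and the `feature_gate` function are assumptions for illustration rather than anything the source prescribes.

```python
import bisect
import math
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each of len(edges)+1 bins; edges must be sorted."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index; eps avoids log(0) for empty bins."""
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log((ai + eps) / (ei + eps)) for ai, ei in zip(a, e))


def feature_gate(baseline: Sequence[float], current: Sequence[float],
                 edges: Sequence[float], last_updated: datetime,
                 max_age: timedelta, psi_limit: float = 0.2,
                 now: Optional[datetime] = None) -> dict:
    now = now or datetime.now(timezone.utc)
    fresh = (now - last_updated) <= max_age
    score = psi(baseline, current, edges)
    return {"fresh": fresh, "psi": score, "pass": fresh and score < psi_limit}


edges = [0.25, 0.5, 0.75]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
shifted = [0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 0.95]   # distribution moved upward
now = datetime(2025, 1, 1, 12, tzinfo=timezone.utc)
result = feature_gate(baseline, shifted, edges,
                      last_updated=now - timedelta(hours=1),
                      max_age=timedelta(hours=6), now=now)
```

The feature is fresh but fails the gate on drift, which is the "alert before model degradation" case: the check runs on the feature values themselves, before any model consumes them.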

MLOps Bootstrap. Registry + CI/CD + canary/blue-green serving. Inference logging. Feature/label-joined inference tables with drift monitors. Target: safe promotions, rollback ready.
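Canary serving ends in a promote-or-roll-back decision. The sketch below shows that decision as a plain threshold rule plus a registry with a champion pointer. It is a hypothetical illustration; production canary analysis would normally add a statistical test and more than one metric. All names, version strings, and thresholds are assumptions.

```python
from dataclasses import dataclass, field
from typing import List


def canary_decision(baseline_error: float, canary_error: float, canary_requests: int,
                    min_requests: int = 1000, max_rel_regression: float = 0.05) -> str:
    """Threshold rule: hold until enough traffic, then promote unless errors regress."""
    if canary_requests < min_requests:
        return "hold"
    if canary_error > baseline_error * (1 + max_rel_regression):
        return "rollback"
    return "promote"


@dataclass
class Registry:
    """Minimal model registry with a champion pointer and promotion history."""
    champion: str
    history: List[str] = field(default_factory=list)

    def promote(self, version: str) -> None:
        self.history.append(self.champion)
        self.champion = version

    def rollback(self) -> None:
        if not self.history:
            raise RuntimeError("no earlier champion to roll back to")
        self.champion = self.history.pop()


reg = Registry(champion="churn-model:v7")
if canary_decision(baseline_error=0.040, canary_error=0.039, canary_requests=5000) == "promote":
    reg.promote("churn-model:v8")
# Later, if the drift monitor on the inference table fires:
reg.rollback()
```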

Group 4: Governance & Reliability (S1→S4)

Contracts-as-Code Rollout. Schema/quality/freshness contracts in Git. CI merge-blocks. Runtime gates with auto-rollback to last-green pointer. Target: zero silent schema breaks; MTTR ≤ 30 min.
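The contract check and the "last-green pointer" can be sketched together in Python. Under the assumptions here (a hypothetical dict-based `CONTRACT` format and a `Pointer` class that consumers read from), a batch that fails the contract never advances the pointer, so consumers keep reading the last green version. The same `check_batch` function could run in CI against sample data to block a merge.

```python
from typing import List

# Hypothetical contract format: expected columns with Python type names, plus not-null rules.
CONTRACT = {
    "columns": {"order_id": "str", "amount": "float", "currency": "str"},
    "not_null": ["order_id", "amount"],
}


def check_batch(rows: List[dict], contract: dict) -> List[str]:
    """Return contract violations; an empty list means the batch is green."""
    errors = []
    expected = contract["columns"]
    for i, row in enumerate(rows):
        if set(row) != set(expected):
            errors.append(f"row {i}: columns {sorted(row)} != {sorted(expected)}")
            continue
        for col, type_name in expected.items():
            value = row[col]
            if value is None:
                if col in contract["not_null"]:
                    errors.append(f"row {i}: {col} is null")
            elif type(value).__name__ != type_name:
                errors.append(f"row {i}: {col} is {type(value).__name__}, expected {type_name}")
    return errors


class Pointer:
    """Consumers always read `current`; it only moves to versions that passed the gate."""

    def __init__(self, version: str) -> None:
        self.current = version   # last green version

    def publish(self, version: str, rows: List[dict], contract: dict) -> List[str]:
        errors = check_batch(rows, contract)
        if not errors:
            self.current = version
        return errors


ptr = Pointer("orders@v41")
errors = ptr.publish("orders@v42",
                     [{"order_id": "A1", "amount": "12.5", "currency": "EUR"}], CONTRACT)
stale = ptr.current          # still "orders@v41": the bad batch never went live
ok = ptr.publish("orders@v43",
                 [{"order_id": "A1", "amount": 12.5, "currency": "EUR"}], CONTRACT)
```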

Observability & Auto-Rollback Pack. Freshness/volume/anomaly monitors with lineage. Incident dashboard with rollback hooks. Target: ≥90% failures caught pre-consumer.
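Two ingredients of this pack, a volume monitor and lineage-aware impact, fit in a short Python sketch. The z-score rule with a limit of 3 standard deviations is a simple assumed choice, the lineage graph is a hypothetical adjacency list, and the row counts are invented for the example.

```python
import statistics
from collections import deque
from typing import Dict, List, Set


def volume_anomaly(history: List[int], today: int, z_limit: float = 3.0) -> bool:
    """Flag today's row count if it sits more than z_limit std devs from the recent mean."""
    mean = statistics.mean(history)
    sd = statistics.stdev(history)   # needs at least two observations
    if sd == 0:
        return today != mean
    return abs(today - mean) / sd > z_limit


def downstream(lineage: Dict[str, List[str]], start: str) -> Set[str]:
    """Every asset reachable from `start` in the lineage graph (breadth-first search)."""
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


history = [10_000, 10_200, 9_900, 10_100, 10_050, 9_950, 10_000]   # daily row counts
lineage = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["fct.revenue", "feat.order_freq"],
    "fct.revenue": ["dash.exec_kpis"],
}
if volume_anomaly(history, today=4_000):
    impacted = downstream(lineage, "raw.orders")   # assets to flag on the incident dashboard
```

Catching the failure at `raw.orders` and walking lineage forward is what makes "pre-consumer" detection possible: the dashboard and the feature are flagged before anyone reads them.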

Group 5: Process-Tech Fusion & Activation (S3/S4)

Process-Tech Fusion Lite. Telemetry landed next to ERP (OTIF/O2C). Driver variant ranking. First Insight Cards with owner/scope/verify/rollback. Target: RCA time reduction; rework reduction.
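Driver variant ranking can start from a very simple heuristic: for each process variant, compare its OTIF misses with the number you would expect at the overall miss rate. The Python sketch below assumes orders have already been joined from ERP and process-mining telemetry into `(variant, otif_met)` pairs. The variant names and counts are invented, and excess misses indicate where to look, not causal proof.

```python
from collections import Counter
from typing import List, Tuple


def rank_variants(cases: List[Tuple[str, bool]]) -> List[Tuple[str, float]]:
    """Rank process variants by OTIF misses in excess of the overall miss rate.

    cases: one (variant_id, otif_met) pair per order, already joined from ERP
    and process-mining telemetry (an assumed input shape).
    """
    overall_miss_rate = sum(1 for _, met in cases if not met) / len(cases)
    volume = Counter(variant for variant, _ in cases)
    misses = Counter(variant for variant, met in cases if not met)
    excess = {v: misses[v] - volume[v] * overall_miss_rate for v in volume}
    return sorted(excess.items(), key=lambda item: item[1], reverse=True)


cases = ([("standard", True)] * 80 + [("standard", False)] * 5
         + [("manual_credit_block", False)] * 10 + [("manual_credit_block", True)] * 5)
ranked = rank_variants(cases)
# manual_credit_block: 10 misses vs 15 * 0.15 = 2.25 expected -> excess of about 7.75
```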

Activation Guardrails. Pre-write checks: idempotent keys, schema authority, separation of duties. Write-back receipts with verification ledger and rollback recipe. Target: ≥99.9% successful writes; zero schema-drift writes.
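The three pre-write checks and the receipt can be combined in one small Python class. It is a hypothetical sketch: `GuardedWriter`, the in-memory `target` standing in for a CRM or ERP table, and the receipt fields are assumptions, not a UCL or vendor API. The receipt captures the prior values, so it doubles as the rollback recipe.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class GuardedWriter:
    """Illustrative write-back guard; `target` stands in for a CRM/ERP table."""
    target: Dict[str, dict]                 # record_id -> fields
    schema_authority: Dict[str, type]       # fields this writer may touch, with types
    ledger: List[dict] = field(default_factory=list)
    used_keys: Set[str] = field(default_factory=set)

    def write(self, idempotency_key: str, record_id: str, changes: dict,
              requested_by: str, approved_by: str) -> dict:
        # 1. Idempotency: a replayed request is acknowledged but not re-applied.
        if idempotency_key in self.used_keys:
            return {"status": "duplicate", "key": idempotency_key}
        # 2. Schema authority: only declared fields with the declared types.
        if record_id not in self.target:
            raise KeyError(record_id)
        for f, v in changes.items():
            expected = self.schema_authority.get(f)
            if expected is None or not isinstance(v, expected):
                raise ValueError(f"schema authority rejects field {f!r}")
        # 3. Separation of duties: requester and approver must differ.
        if requested_by == approved_by:
            raise PermissionError("requester cannot approve their own write")
        record = self.target[record_id]
        receipt = {
            "status": "written", "key": idempotency_key, "record": record_id,
            "before": {f: record[f] for f in changes if f in record},   # rollback recipe
            "added": [f for f in changes if f not in record],
            "after": dict(changes),
            "requested_by": requested_by, "approved_by": approved_by,
        }
        record.update(changes)
        self.used_keys.add(idempotency_key)
        self.ledger.append(receipt)        # verification ledger
        return receipt

    def rollback(self, receipt: dict) -> None:
        """Apply the receipt's rollback recipe: restore old values, drop added fields."""
        record = self.target[receipt["record"]]
        record.update(receipt["before"])
        for f in receipt["added"]:
            record.pop(f, None)


crm = GuardedWriter(target={"C42": {"offer": None, "tier": "gold"}},
                    schema_authority={"offer": str})
receipt = crm.write("req-001", "C42", {"offer": "retention_10pct"},
                    requested_by="retention-copilot", approved_by="cx-manager")
replay = crm.write("req-001", "C42", {"offer": "retention_10pct"},
                   requested_by="retention-copilot", approved_by="cx-manager")
crm.rollback(receipt)
```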

Conclusion

The Problem

Enterprise AI fails at the last mile. Dashboards proliferate without trust. ML models drift undetected for weeks. RAG systems hallucinate without evaluation gates. Agents fragment context, follow hardcoded scripts, and write without contracts. Process intelligence stays siloed from ERP and finance.

The root cause: context is fragmented, ungoverned, and treated as a byproduct rather than a product.

The Solution

UCL treats context as a governed product. Six paradigm shifts transform how enterprises approach data:

Context becomes versioned, evaluated, promoted — like code, not an afterthought

Heterogeneous sources unify — process, ERP, web, EDW through semantic layer + Meta-Graph

Metadata becomes LLM-traversable — the Meta-Graph enables reasoning about context, not just retrieval

One substrate serves all consumption — S1 through S4 plus Activation share semantics without forks

Activation closes the governed loop — insights flow back to enterprise systems, governed and reversible

The Situation Analyzer enables autonomous action — agents decide based on situations, not scripts

What UCL Delivers

Value | Outcome |

One KPI truth | End disputes — same definition everywhere |

Grounded context | Block hallucinations — evaluated Context Packs |

Autonomous copilots | Situation analysis enables decision-making |

Closed-loop activation | Insights flow back — governed, reversible, auditable |

Process-tech fusion | ERP + process signals joined — same-day RCA |

Meta-Graph reasoning | LLMs traverse metadata — blast-radius scoring |

The Architecture

Eight architectural patterns (ingestion, connected DataOps, StorageOps, semantic layer, Meta-Graph, process fusion, consumption structures, and situation analyzer) form a substrate that works with existing tools. No rip-and-replace. UCL extends Snowflake, Databricks, Power BI, dbt, and AtScale, adding governance, context engineering, and activation.

The seven-layer stack implements these patterns. The four-plane view reveals their functional organization — with Pattern 8 as THE GATEWAY between agents and the governed substrate.

UCL Enables; Copilots Act

UCL is substrate, not application. It provides governed context, situation analysis, and activation infrastructure. Agentic copilots sit on top and implement reasoning, decisions, and action-ization. UCL is the stage, the governed context, and the safety net. Copilots are the actors.

The Path Forward

Start with quick wins: governed KPIs, prompt-native BI, Context Packs with evaluation gates, activation guardrails. Build the substrate incrementally. Returns compound across consumption models as the substrate matures.

The Transformation

With UCL, context transforms from byproduct to product. Heterogeneous sources unify. Metadata enables reasoning. Consumption models share semantics. The loop closes end to end: signal → governed context → copilot reasoning → governed activation → evidence.

And with UCL's Situation Analyzer — THE GATEWAY — copilots transform from script-followers into situation-responders. They don't just surface insights — they understand context, decide what to do, and act through governed channels.

Without UCL, agents follow scripts. With UCL, situation analysis enables governed, autonomous action.

The last mile finally connects.

For the mathematical foundation behind cross-graph discovery and the compounding moat — including formal shape-checked equations, worked examples, and 36-month simulation validation — see Compounding Intelligence: How Enterprise AI Develops Self-Improving Judgment. For the ROI framework showing how the four layers compound, see Gen-AI ROI in a Box. For the production architecture, see The Enterprise-Class Agent Engineering Stack.

Arindam Banerji, PhD
