
The AI Re-Think Blog

RAG-MCP: Taming Tool Bloat in the MCP Era

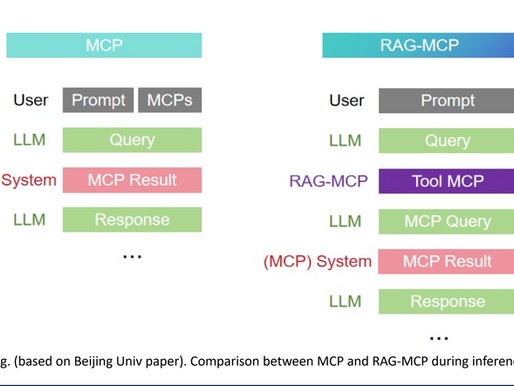

Design and evaluation of a retrieval-driven MCP selector for large tool registries. Paper: RAG-MCP: Mitigating Prompt Bloat in LLM Tool Selection via Retrieval-Augmented Generation. Executive Summary: RAG-MCP addresses a critical scalability challenge facing modern LLM systems: the "prompt bloat" problem that emerges when large language models must select from hundreds or thousands of external tools. The paper introduces a Retrieval-Augmented Generation framework that dynamical

Arindom Banerjee

Nov 22, 12 min read

Self-Improving Agent Systems: Technical Deep Dive

AgentEvolver and the Paradigm Shift Toward Autonomous Agent Evolution: A Technical Analysis for Advanced Practitioners. Executive Summary: AgentEvolver represents a fundamental shift in agent training methodology, moving from expensive human-curated datasets and sample-inefficient reinforcement learning to autonomous, LLM-guided self-evolution. Released by Alibaba's Tongyi Lab in November 2025, the system demonstrates that 7-14B parameter models can outperform 200B+ models when

Arindom Banerjee

Nov 17, 9 min read

Report: Economic & Industrial Impact of “Attention Is All You Need” (Vaswani et al., 2017)

1. Thesis: The 2017 transformer paper created a single, massively parallelizable architecture for sequence/language that (i) made scale itself a performance strategy, (ii) generated a compute-hungry workload that fit GPU roadmaps perfectly, and (iii) gave hyperscalers and new AI labs a board-level story to fund multi-trillion-dollar AI data centers. The measurable, observable part of this “transformer dividend” is about $8.6–8.7T today, and with modestly looser attribution it

Arindom Banerjee

Nov 2, 5 min read
