Production AI That Delivers Consistent KPI-Backed Outcomes

- Arindom Banerjee

- 2 days ago

- 13 min read

A governed, self-improving AI ROI engine embedded in your operational workflows (not a sidecar pilot).

Executive Summary

Ninety-five percent of GenAI pilots never reach reliable production. The root cause is not model quality—it's the absence of a governed, self-improving operating layer that connects AI actions to business KPIs. This document introduces Production AI: a six-pillar platform combining Graph-RAG knowledge fabric, AgentEvolver runtime, and a shared evaluation harness that runs on your existing stack. The result is 30–60 day time-to-production for governed use cases, ~2× higher success rates than DIY approaches (MIT 2025), and an audit-ready evidence trail that finance and risk can trust. We start with quick wins, prove impact in weeks, and scale as a portfolio—not a collection of disconnected pilots.

1. The Problem: AI Spend Is Up, Production ROI Is Flat

The numbers are stark:

95% of GenAI pilots fail to reach reliable production

42% were abandoned or paused in 2025 (~2.5× year-on-year)

$500B+ in spend and risk tied up in stalled programs, drift, and overruns

MIT 2025 finding: Integrated platforms achieve ~67% success vs ~33% for DIY

Enterprises have raced into AI and GenAI. But for most, value is trapped in pilots because production AI is not "a model problem"—it's an operating-system problem.

What's Happening (The Observable Failure Mode)

AI capability is improving fast, but reliability, repeatability, and auditability are not. Most deployments stall in one of three dead zones:

Pilot gravity: PoCs don't survive the first hard incident, audit question, or ownership change.

Sidecar syndrome: Assistants live next to operations (chat), not inside the flows where value is created (ERP/ITSM/CRM/workflow).

Governance debt: Each team invents their own rules, metrics, and safety boundaries—so scaling multiplies risk and cost.

Why It Stalls (Root Causes)

Enterprises typically fail across nine capability gaps spanning fabric, lifecycle, and trust. The most common root causes:

Root Cause | What Breaks |

Fragmented context and signals | Data lake, metrics, tickets, logs, and vector stores aren't one governed fabric—answers "sound right" but don't reconcile |

Model-centric thinking | Teams optimize prompts and agents but don't engineer the end-to-end loop: signals → evals → actions → verification → evidence |

Weak evaluation and rollback | Drift detected late (if at all); promotion rules informal; rollbacks manual; no credible evidence trail |

No way to write value back | Insights exist but don't drive governed actions in systems where work runs (ITSM, ERP, CRM) |

Bottom line: Most AI programs behave like experiments around the edges—not a reversible AI ROI engine that executives can fund with confidence.

[GRAPHIC: Slide 6 — "Nine Capability Gaps Blocking Production AI"]

2. Our Answer: A Governed AI & Intelligent Automation Layer on Your Stack

We do not propose "yet another platform" that replaces your warehouse, observability, MLOps, or workflow investments. The fastest route to ROI is the opposite: reuse what you already run, but add the missing production layer that makes it all work together.

Production AI is a governed layer that:

Unifies context — assistants, agents, and BI use the same KPI and entity truth

Runs the full loop — signals → evals → governed actions → verification → evolution (the "AgentEvolver loop")

Proves impact — evidence packs + KPI rollups that finance/risk can trust

Operationalizes outcomes — writes back into systems of record where value is realized

Two Deployment Modes (Same Principles, Different Hosting)

Inside your estate: Tightly integrated services running over your existing data, ML, observability, and workflow platforms.

Managed runtime (PaaS-style): We host the core runtime + evaluation harness while connecting to your data, systems of record, and observability.

The Core Architectural Idea

One Graph-RAG knowledge fabric + one AgentEvolver runtime loop, wired into your operational systems, with evaluation and evidence built in.

[GRAPHIC: Slide 22 — "One Context Spine — UCL + KG/Graph-RAG + Runtime"]

That combination turns AI from "a collection of assistants" into a repeatable capability:

Governed promotions and rollbacks

Shared SLOs and safety boundaries

Continuous improvement without chaos

Audit-ready proof tied to KPI movement

The Integration Multiplier

When these components work as an integrated system (not bolted-on tools), the combined effect is significant:

[GRAPHIC: Slide 10 — "Impact by Capability — How Governed, Self-Improving AI Pays Back"]

Metric | Improvement |

Success rate | ~2× vs ad-hoc stacks |

Time-to-value | 40% faster |

Maintenance effort | 50% lower |

Audit coverage | 100% |

Plus a single AI ROI scorecard that finance, risk, and ops all trust.

[GRAPHIC: Slide 30 — "What This Platform Guarantees — And How We Prove It"]

3. Why the Six Technology Pillars Make the Shift Real (Not Theoretical)

[GRAPHIC: Slide 17 — "Technology Highlights — What Makes the Platform Work"]

Across industries, the firms that consistently get value from AI don't just "have models." They invest in six specific capabilities that show up again and again in the literature and in real deployments: graph-aware context layers, governed runtimes, evaluation & guardrails, signal fabric, intelligent automation, and fit-for-purpose platforms. This stack is what turns scattered pilots into a repeatable, governed AI ROI engine.

Below we summarize each pillar, why it matters, and what real-world data suggests about impact.

3.1 Graph-RAG Knowledge Fabric (UCL + KG + Graph-RAG)

What it does. Creates a unified context layer over tickets, logs, KBs, metrics, and master data using a domain knowledge graph and Graph-RAG. This gives assistants and agents explainable, multi-hop retrieval instead of "bag-of-chunks" search, and allows you to enforce consistent KPI and entity semantics across use cases.

Why it matters. Most of today's GenAI failures trace back to bad or missing context—wrong schema, missing joins, stale or conflicting definitions. A graph-aware fabric lets you bind KPIs and entities to contracts (revenue, OTIF, MTTR, etc.) and reuse those semantics everywhere: support copilot, change-risk agent, quarter-close analyst.

Why we know it moves the needle. Across industries, KGs and Graph-RAG have delivered ~3.4× answer accuracy vs. vector-only RAG with large hallucination reductions (Microsoft GraphRAG benchmarks), and up to ~5× faster queries with ~40% lower infra cost in graph+vector performance tests (Neo4j / NVIDIA-style benchmarks). LinkedIn reports ~62% lower median issue-resolution time after moving support to a ticket knowledge graph, while supply-chain KGs at firms like Scoutbee and Walmart report ~75% faster supplier discovery and real-time risk monitoring over 100k+ suppliers. Our fabric is designed to industrialize exactly this pattern across your estate, not just in a single pilot.

3.2 AgentEvolver Runtime (Self-Improving Loop)

What it does. Provides a shared, governed loop: signals → evals → governed actions → verification → evolution, with roll-forward / rollback semantics. It routes work across agents, tools, and playbooks, and logs how skills change over time.

Why it matters. Most enterprises today have flows but not a runtime: each bot or copilot has its own ad-hoc logic, no shared SLOs, and no clear place where "safe to act" decisions are made. A cross-workflow runtime turns AI from one-off automations into an operating discipline that can be measured, tuned, and audited. This is aligned with the emerging discipline of agent engineering: designing agents with explicit tools, evals, safety policies, and feedback loops so they can be deployed and improved like production software, not one-off scripts.

Why we know it moves the needle. Production engineering patterns (Netflix "Managed Delivery," Uber's Michelangelo, DoorDash's Model Orchestration, etc.) show that shared runtimes yield 2–4× faster rollout cycles and order-of-magnitude drops in rollback time compared to bespoke pipelines. MIT's 2025 analysis of integrated vs DIY AI stacks reports ≈67% success for integrated platforms vs ≈33% for DIY, largely because they embed closed-loop governance and shared infra instead of repeating it per project. Our runtime aims to give you those same economics, but focused on governed AI actions, not just model deployment.

[GRAPHIC: Slide 21 — "Agent Engineering on the Production-AI Runtime"]

3.3 Eval, Guardrails & Evidence Harness

What it does. Provides a unified evaluation harness (LLM judges, regression suites, synthetic tasks), policy-as-code guardrails (SoD, safe-write boundaries, blast-radius rules), and an evidence ledger that ties every governed action to tests, approvals, and KPIs.

Why it matters. Without systematic evals and guardrails, AI remains "hero-engineer theater": a few experts debug prompts and dashboards, but finance, risk, and regulators see anecdotes instead of audit-ready proof. A common harness lets you do three things that are otherwise very hard: (1) quantify correctness and drift across use cases; (2) wire business KPIs (MTTR, CSAT/XLA, leakage, unit cost) into promotion/rollback; and (3) ship evidence packs instead of PDFs when someone asks "how do we know this is safe?"

Why we know it moves the needle. Recent production stories show that LLM-first eval harnesses change the economics of AI quality and ROI:

DoorDash reports ≈98% faster evaluation turnaround and ≈90% hallucination reduction after moving to AutoEval + LLM judges for content and workflow evaluation.

Shopify Sidekick improved agreement between human and automated evals from Cohen's κ ≈0.02 (random) to ≈0.61 (near-human) via iterative LLM-judge calibration, making automated evals trustworthy enough to gate deployment.

Teams like Echo AI / Log10 describe 20-point F1 improvements while cutting prompt-optimization work from days to under an hour when eval harnesses are wired directly into the iteration loop.

3.4 Signals, Telemetry & Drift Fabric

What it does. Normalizes logs, KPIs, alerts, and feedback into a high-signal metric and event fabric with drift checks, SLO monitors, and anomaly detectors feeding the AgentEvolver loop and the Graph-RAG fabric.

Why it matters. Most monitoring today is tool- and team-siloed; AI incidents are spotted late (via Twitter, CFO complaints, or auditors) rather than via first-class signals. A unified signal fabric lets you declare "what good looks like" (latency, error rates, correctness bands, business deltas) and wire those conditions into promotion, rollback, and escalation policies.

Why we know it moves the needle. Observability leaders (Datadog, Honeycomb, New Relic, etc.) and SRE literature show that rich, centralized telemetry yields 25–60% faster incident response and major reductions in false-positive noise when coupled with SLOs and runbooks. Honeycomb, for example, reports 94% query success and >5× higher retention (≈26.5% vs 4.5%) for users of their AI-assisted Query Assistant, precisely because telemetry and AI are wired into a closed loop. We extend this pattern from infra into AI behavior and business KPIs, driving faster, safer learning.

3.5 Intelligent Automations & Agent Packs

What it does. Provides pre-built, graph-aware agents and runbooks for core scenarios—Ship AI Safely, governed incident loops, cost-optimization, support copilots, quarter-close analyzers—each wired to signals, guardrails, and evidence packs.

Why it matters. Most enterprises don't suffer from a lack of ideas; they suffer from integration and safety debt. Starting with proven patterns reduces the risk that every use case becomes a bespoke science project. Agent packs also make it easier for operations teams to understand and own automations because they ship with KPIs, policies, and "knobs," not opaque code.

Why we know it moves the needle. Case studies from workflow / automation platforms (UiPath, ServiceNow, n8n, Zapier-for-Ops patterns, etc.) consistently show 30–70% manual-work reduction and months-not-years payback when automations are shipped as reusable packs instead of DIY bots. Public n8n stories, for example, highlight >60% reductions in repetitive ops work and rapid iteration on new flows once a shared automation backbone exists. Our agent packs follow that pattern but add graph-aware context and explicit safety/ROI budgets, so they can be trusted in production, not just used for back-office tasks.

This is also where quick wins with tools like n8n show up: we can take existing n8n-style flows (for onboarding, approvals, provisioning, notifications, simple reconciliations, etc.), wrap them with graph-aware context and guardrails, and promote them into governed agent packs. That gives you visible reductions in repetitive work and cycle times in weeks, while exercising the same runtime, eval harness, and evidence patterns that will later support more complex automations.

3.6 Operational Platforms & Systems of Record

What it does. Treats your existing platforms—Snowflake / BigQuery / Databricks, Bedrock / Azure OpenAI / Vertex, ServiceNow / Jira / SAP / Salesforce, observability and event platforms—as the execution substrate. The Graph-RAG fabric and AgentEvolver runtime read/write via governed connectors; systems of record remain the "source of truth."

Why it matters. The economics of AI productionization break down if value requires rip-and-replace. By sitting "over" your stack instead of "beside" or "instead of" it, the runtime can (a) reuse existing controls and lineage, (b) show KPI and ROI impact directly in systems leaders already trust, and (c) avoid the procurement and change-management drag of yet another platform.

Why we know it moves the needle. Industry analyses (e.g., MIT 2025 integrated-stack study; multiple Forrester TEI reports for AI platforms integrated with existing clouds) show that integrated, over-the-top AI layers achieve faster time-to-value and >2× higher project success rates than isolated tools, precisely because they "meet enterprises where they are"—reusing identity, data, and governance—while adding the missing self-improvement and evaluation capabilities.

4. Use-Case Patterns and "Day in the Life" Impact

Instead of selling an abstract "AI platform," we anchor Production AI in repeatable production patterns—the workflows where enterprises either (a) get compounding AI ROI or (b) stall in pilot purgatory. Each pattern is designed to be proven in 30–60 days and then scaled as a portfolio, because the same AgentEvolver loop repeats across domains.

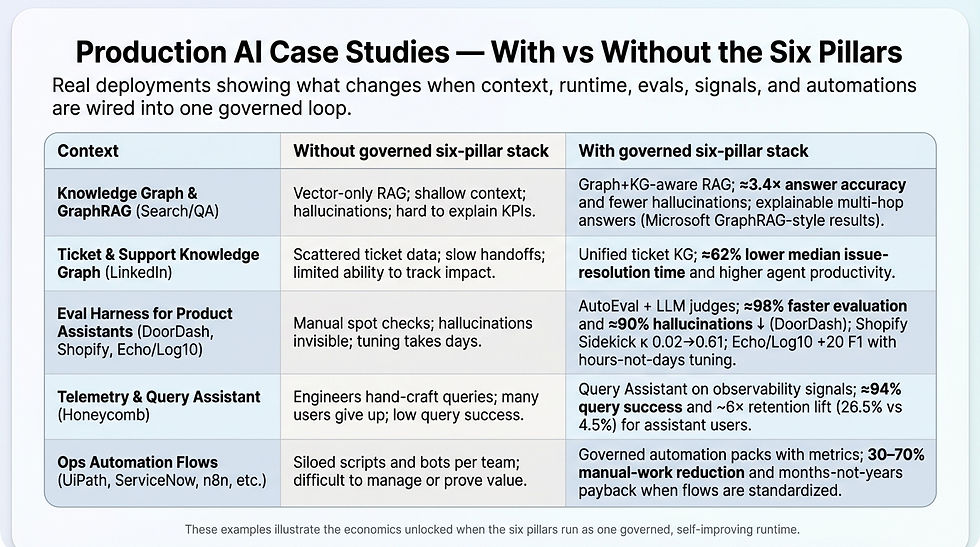

[GRAPHIC: Slide 12 — "Production AI Case Studies — With vs Without the Six Pillars"]

Pattern 1 — Ship & Automate Safely (Change & Release Guardrails)

What this pattern governs:

Model promotions (forecasting, personalization, anomaly models)

Prompt/agent changes (new tools, new retrieval sources, new policies)

Workflow automations that can move money / inventory / customer outcomes

Underlying infra changes that materially affect AI behavior

Anchor quick-win lines (supply chain + retail):

Supply chain: Demand-sensing / ETA / allocation logic updates; supplier-risk scoring; price-variance guardian rules

Retail: Pricing/promo model updates; search/ranking changes; promotion leakage controls

Before (Typical Failure Mode) | After (Production AI Behavior) |

One-shot cutovers, manual approvals, inconsistent gates | Canary + staged rollout, blast-radius controls, SoD approvals |

"Rollback" = scramble; "audit" = after-the-fact storytelling | Automatic rollback under explicit policy triggers |

Production incidents become the test suite | Policy-as-code gates: "no eval pass, no promotion" |

Evidence scattered across tools; impact on KPIs hard to prove | Every change emits an evidence pack (what changed, why, what it passed, what KPIs it's allowed to move) |

"Prove-it" outcomes (what execs can hold you to):

<10-minute rollback for risky changes

≥98% safe-change success rate on promotion gates

30–60 days time-to-production for governed AI changes (not a one-off PoC)

Pattern 2 — Run & Support Better (Ops + Self-Improving Signals)

What this pattern operationalizes:

Real-time "sense → decide → act → verify" loops

Assistants wired into tickets, exceptions, and actions—not sidecar chatbots

Continuous improvement based on outcomes (did the action fix the KPI, or just close the ticket?)

Anchor quick-win lines (supply chain + retail):

Supply chain: OTIF exception triage and mitigation; invoice/GR exception automation (reconcile → fix → post → audit pack)

Retail: Stockout avoidance (signals → allocation actions → verify shelf availability); returns fraud + chargeback triage

Before (Typical Failure Mode) | After (Production AI Behavior) |

Noisy alerts, manual investigations, fragile playbooks | Signal→action pipelines that cut false positives and shorten decision loops |

Assistants hallucinate or can't explain why an answer is correct | Graph-RAG–backed assistants: grounded, multi-hop, explainable |

Escalations and rework dominate; cost-per-incident drifts upward | Cost guardrails tied to unit economics (per-SKU, per-order, per-ticket), not just "cloud spend" |

"Prove-it" outcomes:

First-5-minute resolution ≥80–85% for targeted lines

MTTR reduction 50–90% where workflows are truly closed-loop

AI/cloud unit-cost reduction 20–40% via explicit budgets + guardrails

Pattern 3 — Prove Value & Scale (Evidence + Continuous Learning)

This is the pattern that stops "one impressive deployment" from turning into ten bespoke, un-auditable snowflakes.

Before (Typical Failure Mode) | After (Production AI Behavior) |

Dashboards without action; pilots without promotion rules | Quality-gated plays tied to KPI deltas (baseline, target, guardrails, failure modes) |

Finance sees "usage," not P&L-linked deltas | An "ROI ledger" that links: decision → action → verification → KPI impact → evidence trail |

Risk can't sign off because controls and provenance are unclear | A governed ladder: PoC → canary → production, with explicit promotion criteria |

"Prove-it" outcomes:

100% of live use cases have ROI tracked (by workflow, not by model)

≥90% workflow coverage in production for the selected domain within Q1–Q2, because the runtime is reusable

"Day in the Life" Storyboards

The deck's storyboards are not decoration—they are operating proof that the loop is closed:

Below is an example of how production-ai makes AI assistants fast, effective/useful:

Slide 15 shows how assistants become operationally trustworthy when they can retrieve, act, verify, and learn with evidence.

5. How We Engage: Quick Wins First, Then an AI ROI Engine

[GRAPHIC: Slide 15 — "Quick Wins Portfolio — Where We Start in 30-60 Days"]

We lead with engineering and outcome accountability. We do not sell "months of platform setup." We ship governed plays that land in real systems of record and produce evidence that technical business executives can trust.

1) Quick-Scan Diagnostic (0–2 weeks)

Goal: Decide what to prove first—and what must be true for proof to be credible.

What we do:

Baseline your current stack and operating reality (signals, workflows, controls, ownership)

Identify where UCL-like context already exists (semantic layers, KPIs, process-mining outputs, gold tables, curated logs)

Locate the "last mile" gaps: where insights fail to become actions, or actions aren't verified

What you get:

A prioritized quick-wins portfolio (we lead with supply chain + retail lines)

Target KPIs + success criteria per line (what "better" means, how it's measured)

A first-pass "evidence pack" definition (what artifacts will satisfy CFO/CISO scrutiny)

2) Governed Quick-Win Sprint (30–60 days)

Goal: Ship 1–2 plays that are production-shaped from day one.

What we stand up:

Runtime loop + eval harness + evidence outputs on your estate (or as a managed runtime where needed)

Policy gates appropriate to the line (SoD, rollback policy, data provenance, audit artifacts)

What we deliver:

1–2 governed plays, typically chosen from:

Ship & Automate Safely (guardrails + rollback + evidence for risky changes)

Run & Support Better (closed-loop exception handling, not just "assistant chat")

Prove Value & Scale (ROI ledger + promotion criteria so it doesn't die after the demo)

Each play ships with KPI baselines, SLOs, eval schemas, and Q1–Q2 impact targets—plus the evidence pack.

3) Runtime Subscription & Operate-and-Scale Assist

Goal: Turn "one win" into a compounding engine.

What this includes:

Keep the knowledge fabric + runtime loop running in your estate

Onboard additional plays across supply chain and retail (and ITOps as a third supporting line)

Co-managed operation: tune evals, grow the graph/context, expand automation packs, harden guardrails

Optional value-share aligned to measurable outcomes (deflection, MTTR, margin leakage reduction, working capital / exception cost reduction)

Why this compounds: Every new play benefits from the same six pillars (context + runtime + eval + signals + automation + systems-of-record integration). Improvements in one area lift the others. That's the difference between a "portfolio engine" and a collection of demos.

6. Who This Is For

This abstract (and the companion deck/report) is aimed at technical business executives and the engineering leaders they trust:

Audience | Core Pain Point |

Technical CIOs / CDOs / Heads of AI & Platforms | Being asked to justify AI/cloud spend with outcomes, not optimism—need a disciplined path from pilots to P&L impact |

Operations Leaders (Supply Chain & Retail) | Exception cycle time, OTIF recovery, margin leakage, stockout reduction, returns/chargeback loss—and "action actually happened" verification |

Architects & Reliability Leaders | Already have warehouses/lakehouses, observability, MLOps, ITSM/workflow tools—want an overlay that makes AI safe, measurable, and auditable on that existing estate |

CFO / CISO Stakeholders | Don't want promises—want evidence: promotion gates, rollback attestations, provenance, control logs, and an ROI ledger tied to KPIs |

The Promise Is Deliberately Concrete

Within one or two quarters, you should be able to point to:

Specific supply chain + retail workflows where governed, self-improving AI is moving KPIs, and

An evidence trail that finance, risk, and regulators can audit without heroic effort.

7. Next Steps

To evaluate fit for your architecture and identify integration candidates:

Technical architecture review — Map the six pillars against your current stack (data platform, observability, workflow/ITSM, ML infrastructure) to identify reuse points and integration gaps.

Quick-Scan Diagnostic (0–2 weeks) — A lightweight technical assessment that produces:

Current-state inventory: existing semantic layers, signal sources, workflow endpoints, and control surfaces

Gap analysis: where context is fragmented, where actions aren't verified, where evidence trails break

Candidate use-case shortlist with integration complexity and KPI baseline estimates

Pick a 30-day pilot — Choose one to prove the loop on your stack:

LLMOps/MLOps platform setup: Stand up the governed runtime (eval harness, promotion gates, rollback policies) integrated with your existing ML infrastructure and CI/CD

AgentEvolver demo in your context: Deploy the self-improving loop on one of your workflows—signals → evals → governed actions → verification → evolution—using your data and systems of record

LLM/ML Observability for business metrics: Wire model and agent telemetry to business KPIs (not just latency/tokens), with drift detection and SLO-based alerting tied to outcomes you already track

Contact: Arindam Banerji, PhD

Comments